AR Accessibility | Apple ARKit vs Meta Spark vs Google ARCore Comparison

Augmented reality technology is changing how we interact with digital content, but many developers overlook the accessibility needs of users with disabilities. As AR applications become more common in education, healthcare, and entertainment, creating inclusive experiences isn’t just good practice; it is increasingly a legal expectation under laws such as the ADA, with the WCAG guidelines serving as the usual technical benchmark. This comparison examines how three major AR development platforms approach accessibility features and support for users with different abilities.

Current State of AR Accessibility

The augmented reality market faces significant barriers for users with disabilities. Traditional accessibility testing tools aren’t designed for spatial computing environments, leaving developers without clear methods to ensure their AR applications meet digital accessibility compliance standards. Unlike traditional websites where screen reader compatibility and keyboard navigation provide standard solutions, AR environments require entirely new approaches to inclusive design.

Research shows that AR applications often exclude users with visual, auditory, motor, and cognitive disabilities. The immersive nature of these technologies creates unique challenges that traditional web accessibility testing methods cannot address. Users with visual impairments struggle with AR interfaces that rely heavily on visual cues, while those with motor disabilities find gesture-based controls difficult or impossible to use.

Current AR accessibility standards remain underdeveloped compared to established web guidelines. The W3C’s XR Accessibility User Requirements (XAUR) document, published as a Working Group Note, provides some direction, but implementation varies significantly across different development platforms. This inconsistency makes it difficult for developers to create truly accessible AR experiences that work for everyone.

Apple ARKit Accessibility Features

Apple has positioned ARKit as a leader in spatial computing accessibility through its integration with established iOS accessibility features. The platform benefits from Apple’s long-standing commitment to inclusive design, which appears clearly in how ARKit applications can work with VoiceOver and other assistive technologies.

Spatial Audio Implementation

ARKit’s spatial audio capabilities use advanced algorithms to create realistic 3D soundscapes that help users understand their environment. The platform includes built-in support for binaural audio processing, which simulates how sounds reach each ear at different times and volumes. This technology proves especially valuable for users with visual impairments who rely on audio cues for navigation and object identification.
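To make the "different times and volumes" idea concrete, here is a minimal, platform-neutral sketch of the two cues involved. It is not ARKit code. The time difference uses Woodworth’s spherical-head approximation, the level difference is approximated with a simple constant-power pan, and the head radius and function names are assumptions chosen for illustration.

```python
import math
from typing import Tuple

SPEED_OF_SOUND_M_S = 343.0   # dry air at roughly 20 degrees Celsius
HEAD_RADIUS_M = 0.0875       # a commonly used average; an assumption here


def interaural_time_difference(azimuth_deg: float) -> float:
    """Woodworth's spherical-head estimate of the arrival-time gap in seconds.

    azimuth_deg is the source angle from straight ahead, clamped to
    [-90, 90]. Positive values mean the source is to the listener's right.
    """
    theta = math.radians(max(-90.0, min(90.0, azimuth_deg)))
    return (HEAD_RADIUS_M / SPEED_OF_SOUND_M_S) * (theta + math.sin(theta))


def ear_gains(azimuth_deg: float) -> Tuple[float, float]:
    """Constant-power pan as a crude stand-in for level differences.

    Returns (left_gain, right_gain); the squared gains always sum to 1,
    so perceived loudness stays roughly constant as the source moves.
    """
    clamped = max(-90.0, min(90.0, azimuth_deg))
    pan = (clamped + 90.0) / 180.0        # 0 = hard left, 1 = hard right
    angle = pan * math.pi / 2.0
    return math.cos(angle), math.sin(angle)
```

A source directly to one side yields a gap of roughly two thirds of a millisecond, which is the scale of cue the ear uses to localize sound. Production engines use measured head-related transfer functions rather than these closed-form approximations.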

The ARKit audio system automatically adjusts sound based on head tracking and device orientation. When users turn their heads, audio sources maintain their spatial relationship to virtual objects, creating a consistent auditory environment. This feature helps users with visual disabilities build mental maps of AR spaces and locate interactive elements through sound alone.
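The head-tracking behaviour described above comes down to recomputing each source’s direction relative to the listener’s current heading. The sketch below shows that calculation on a flat ground plane. The coordinate convention (+z forward at yaw 0, +x to the right) and the function name are assumptions for illustration, not the convention of any particular SDK.

```python
import math
from typing import Tuple


def relative_azimuth_deg(
    listener_xz: Tuple[float, float],
    listener_yaw_deg: float,
    source_xz: Tuple[float, float],
) -> float:
    """Angle of a world-anchored source relative to where the head points.

    Positive results mean "to the right" of the listener. The result is
    wrapped into the range [-180, 180).
    """
    dx = source_xz[0] - listener_xz[0]
    dz = source_xz[1] - listener_xz[1]
    world_bearing = math.degrees(math.atan2(dx, dz))
    return (world_bearing - listener_yaw_deg + 180.0) % 360.0 - 180.0
```

If a source sits straight ahead and the listener turns 90 degrees to the right, the function returns -90, so the sound is now rendered on the left. The source has not moved in the world, which is what keeps the auditory scene stable.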

RealityKit’s AudioPlaybackController, which ARKit applications commonly use for audio playback, allows developers to fade audio in and out smoothly, preventing jarring transitions that can disorient users with cognitive disabilities. The system also supports directional audio patterns that can guide users toward specific objects or interface elements through carefully placed sound cues.
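As a rough illustration of what a smooth fade involves, the following sketch generates a linear gain ramp. It is a generic example, not Apple’s implementation; the function name and the 50 ms default step are assumptions.

```python
from typing import List


def fade_gains(
    start_gain: float,
    end_gain: float,
    duration_s: float,
    step_s: float = 0.05,
) -> List[float]:
    """Return gain values stepping linearly from start_gain to end_gain.

    The list includes both endpoints. A non-positive duration or step
    collapses to an immediate jump to end_gain.
    """
    if duration_s <= 0 or step_s <= 0:
        return [end_gain]
    steps = max(1, round(duration_s / step_s))
    return [
        start_gain + (end_gain - start_gain) * i / steps
        for i in range(steps + 1)
    ]
```

An application would apply one value per timer tick. Real audio engines usually ramp in decibels or per sample, so treat this as a sketch of the idea only.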

Voice Control Integration

ARKit applications can integrate with iOS voice control features, allowing users to operate AR interfaces through spoken commands. This capability proves essential for users with motor disabilities who cannot perform complex gesture interactions. The platform supports custom voice commands that developers can map to specific AR functions like object selection, menu navigation, and content manipulation.

The voice recognition system works in conjunction with spatial tracking, so users can give commands like “select the red object” while looking at specific items in their environment. This multimodal approach combines speech input with gaze tracking to create more precise interaction methods for users who cannot use hand gestures effectively.
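One way to picture this multimodal resolution is to filter candidates by what was said and then rank them by how close they lie to the gaze direction. The sketch below does exactly that in plain Python. The `SceneObject` type, the 15 degree tolerance, and the assumption that the transcript and gaze ray arrive from the platform’s speech and tracking layers are all hypothetical.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class SceneObject:
    name: str
    color: str
    position: Vec3


def _angle_between_deg(a: Vec3, b: Vec3) -> float:
    """Angle between two vectors in degrees (180 if either has zero length)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    if norm == 0:
        return 180.0
    return math.degrees(math.acos(max(-1.0, min(1.0, dot / norm))))


def resolve_selection(
    transcript: str,
    objects: List[SceneObject],
    eye_position: Vec3,
    gaze_direction: Vec3,
    max_angle_deg: float = 15.0,
) -> Optional[SceneObject]:
    """Pick the object whose colour is spoken and that is nearest the gaze ray."""
    words = set(transcript.lower().split())
    best, best_angle = None, max_angle_deg
    for obj in objects:
        if obj.color.lower() not in words:
            continue  # speech rules this object out
        to_obj = tuple(p - e for p, e in zip(obj.position, eye_position))
        angle = _angle_between_deg(gaze_direction, to_obj)
        if angle <= best_angle:
            best, best_angle = obj, angle
    return best
```

Speech narrows the candidates and gaze breaks the tie, so neither input has to be precise on its own. Returning `None` when nothing matches lets the application ask the user to repeat rather than guess.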

Visual Accessibility Features

ARKit supports high contrast modes and dynamic text sizing that integrate with iOS accessibility settings. Users can adjust visual elements to meet their specific needs without requiring separate configuration within each AR application. The platform also provides APIs for creating alternative visual representations of AR content.

Developers can implement audio descriptions for visual elements using the accessibility component that RealityKit provides for entities in ARKit scenes (AccessibilityComponent). This feature allows screen readers to announce information about virtual objects, their spatial relationships, and available interactions. The system maintains synchronization between visual and audio feedback to create coherent experiences for users with varying degrees of visual ability.
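The text a screen reader announces still has to be composed by the application. A minimal sketch of building such a description from an object’s position relative to the user follows. The clock-face phrasing, the function name, and the offset convention (metres to the right and metres forward) are assumptions for illustration.

```python
import math
from typing import Optional


def describe(
    name: str,
    offset_right_m: float,
    offset_forward_m: float,
    action: Optional[str] = None,
) -> str:
    """Build a short spoken description using distance and clock direction.

    12 o'clock is straight ahead, 3 o'clock is directly to the right.
    """
    distance = math.hypot(offset_right_m, offset_forward_m)
    bearing = math.degrees(math.atan2(offset_right_m, offset_forward_m)) % 360
    hour = round(bearing / 30) % 12 or 12   # map 0 to 12 o'clock
    text = f"{name}, {distance:.1f} metres away at {hour} o'clock"
    if action:
        text += f". {action}"
    return text
```

The resulting string would be assigned to whatever label the platform’s accessibility layer reads aloud, and regenerated when the user or the object moves far enough for the description to change.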

Meta Spark Accessibility Capabilities

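Meta Spark, formerly known as Spark AR, focuses on social AR experiences across Facebook and Instagram platforms. Meta announced in 2024 that it would retire the Meta Spark platform for third-party creators in January 2025, so teams evaluating it should confirm its current availability. The platform’s approach to accessibility reflects Meta’s broader efforts to make virtual and augmented reality more inclusive for users with disabilities.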

Spatial Audio Features

Meta Spark includes spatial audio tools that create immersive soundscapes for AR filters and effects. The platform uses eight-channel audio processing to simulate realistic sound positioning, allowing developers to place audio sources at specific locations in 3D space. This technology helps users understand the spatial relationships between virtual objects and their real environment.

The platform’s spatial audio implementation includes head tracking functionality that adjusts sound perspective as users move. When someone turns their head, audio sources maintain their apparent location, creating a consistent auditory experience. This feature particularly benefits users with visual impairments who rely on spatial audio cues for orientation and navigation.

Meta Spark’s audio system supports dynamic range adjustment and volume balancing to accommodate users with hearing impairments. Developers can implement variable audio settings that allow users to customize sound levels based on their specific needs and hearing abilities.

Voice Command Support

Meta Quest devices include voice control capabilities that extend to Spark AR applications. Users can activate features, navigate menus, and control AR content through spoken commands. The platform supports natural language processing that interprets user intent even when commands don’t match exact phrases.

The voice control system includes accessibility shortcuts that allow users to quickly access important functions. For example, users can say “show menu” or “help” to access assistance features without complex gesture interactions. This functionality proves especially valuable for users with motor disabilities who struggle with hand-based controls.
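Tolerating phrasing that does not match a command exactly can be approximated, at its simplest, with string similarity. The sketch below uses Python’s standard `difflib` module to map a transcript to the closest registered shortcut. It is far cruder than real natural language processing, and the command table and action names are hypothetical.

```python
import difflib
from typing import Callable, Dict, Optional


def build_matcher(
    commands: Dict[str, str], cutoff: float = 0.6
) -> Callable[[str], Optional[str]]:
    """Return a function mapping an utterance to an action name or None.

    commands maps a canonical phrase (for example "show menu") to an
    application-defined action identifier.
    """
    phrases = list(commands)

    def match(utterance: str) -> Optional[str]:
        cleaned = " ".join(utterance.lower().split())
        hits = difflib.get_close_matches(cleaned, phrases, n=1, cutoff=cutoff)
        return commands[hits[0]] if hits else None

    return match


# Hypothetical shortcut table for an AR experience.
match_command = build_matcher(
    {"show menu": "open_menu", "help": "open_help", "go back": "navigate_back"}
)
```

With this table, "show the menu" resolves to `open_menu` while an unrelated utterance returns `None`. Keeping the cutoff adjustable matters for accessibility, because users with speech differences may need a more forgiving threshold.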

Alternative Interaction Methods

Meta Spark supports multiple interaction methods beyond traditional touch and gesture controls. The platform includes eye tracking capabilities for supported devices, allowing users to select objects and navigate interfaces through gaze-based input. This feature provides an important alternative for users who cannot perform precise hand movements.
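Gaze-based selection is usually built on a dwell timer: an item activates once the user has looked at it continuously for a set time. Here is a minimal sketch of that logic, independent of any eye-tracking API. The class name, the per-frame `update` call, and the one second default are assumptions.

```python
from typing import Hashable, Optional


class DwellSelector:
    """Fire a selection once gaze has rested on one target long enough."""

    def __init__(self, dwell_seconds: float = 1.0) -> None:
        self.dwell_seconds = dwell_seconds
        self._target: Optional[Hashable] = None
        self._elapsed = 0.0
        self._fired = False

    def update(self, gazed_target: Optional[Hashable], dt: float) -> Optional[Hashable]:
        """Call once per frame with the id under the gaze ray (or None).

        Returns the target id exactly once when the dwell completes,
        otherwise None. Looking away resets the timer.
        """
        if gazed_target != self._target:
            self._target = gazed_target
            self._elapsed = 0.0
            self._fired = False
            return None
        if gazed_target is None or self._fired:
            return None
        self._elapsed += dt
        if self._elapsed >= self.dwell_seconds:
            self._fired = True
            return gazed_target
        return None
```

Firing only once per dwell prevents repeated activation while the user keeps looking at the same item. The dwell time should be a user setting, since the comfortable duration varies widely between people.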

The platform also supports switch control integration for users with severe motor disabilities. External switches can trigger AR interactions, making it possible for users with limited mobility to participate in augmented reality experiences. This accommodation addresses the needs of users who rely on assistive technology for computer interaction.

Google ARCore Accessibility Implementation

Google ARCore takes a different approach to accessibility by focusing on Android’s built-in accessibility services and machine learning capabilities. The platform leverages Google’s expertise in AI and accessibility tools to create more inclusive AR experiences.

Spatial Audio Capabilities

ARCore includes spatial audio features through integration with Google’s audio processing libraries, such as Resonance Audio. The platform supports 3D positioned audio sources that maintain spatial relationships as users move through AR environments. Developers can create soundscapes that help users understand object locations and environmental layout through audio cues.

The ARCore audio system includes support for ambisonic audio formats that create realistic 3D sound fields. This technology simulates how sound reflects off surfaces and travels through space, providing users with accurate spatial information about their environment. The feature proves particularly valuable for accessibility applications that help users with visual impairments navigate physical spaces.

Google’s spatial audio implementation includes noise reduction algorithms that improve speech recognition in noisy environments. This capability helps ensure that voice commands and audio feedback remain clear and understandable even in challenging acoustic conditions.

Voice Navigation Features

ARCore applications can integrate with Google Assistant and Android’s voice recognition services. Users can control AR content through natural language commands, making applications more accessible to users with motor disabilities. The platform supports context-aware voice commands that understand user intent based on what they’re looking at or interacting with.

A notable example is the development of AR navigation applications specifically designed for users with visual impairments. These applications use ARCore’s tracking capabilities combined with voice commands to provide turn-by-turn navigation guidance through indoor spaces. Users can ask for directions, receive obstacle warnings, and get descriptions of their surroundings through spoken interaction.

Machine Learning Integration

ARCore benefits from Google’s advanced machine learning capabilities for object recognition and scene understanding. The platform can automatically identify and describe objects in the user’s environment, providing valuable information for users with visual impairments. This feature works in real-time to announce relevant details about nearby objects and potential obstacles.

The platform’s depth sensing capabilities enable real-time obstacle detection that can warn users about potential hazards. This technology proves especially important for navigation applications designed to help users with visual impairments move safely through unfamiliar environments.
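The obstacle-warning idea can be sketched without any SDK by scanning a grid of distances for the nearest reading inside a warning radius. In the example below the depth map is a plain list of rows in metres. The 1.2 m threshold, the left/ahead/right split by image thirds, and the function name are assumptions, and a real application would obtain the grid from the platform’s depth API.

```python
from typing import List, Optional, Sequence, Tuple

ZONES = ("left", "ahead", "right")


def nearest_obstacle(
    depth_m: Sequence[Sequence[Optional[float]]],
    warn_distance_m: float = 1.2,
) -> Optional[Tuple[float, str]]:
    """Return (distance, zone) for the closest reading within range.

    Readings of 0 or None are treated as "no data" and skipped. The zone
    is decided by which horizontal third of the image the pixel is in.
    Returns None when nothing is within warn_distance_m.
    """
    best: Optional[Tuple[float, str]] = None
    for row in depth_m:
        width = len(row)
        for col, distance in enumerate(row):
            if not distance or distance < 0 or distance > warn_distance_m:
                continue
            if best is None or distance < best[0]:
                third = 0 if col * 3 < width else (1 if col * 3 < 2 * width else 2)
                best = (distance, ZONES[third])
    return best
```

The returned pair maps directly to a spoken or haptic warning such as "obstacle ahead, 0.8 metres". Depth readings are noisy, so a production version would smooth over several frames before alerting.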

Spatial Audio Comparison Across Platforms

Each platform takes a distinct approach to spatial audio implementation, reflecting different priorities and technical capabilities. Apple ARKit emphasizes integration with existing iOS accessibility features, while Meta Spark focuses on social AR experiences, and Google ARCore leverages machine learning for environmental understanding.

ARKit’s spatial audio system provides the most seamless integration with established assistive technologies. The platform’s binaural audio processing creates realistic 3D soundscapes that work effectively with VoiceOver and other screen reading technologies. This integration allows users to hear both AR content and system feedback without conflicts or confusion.

Meta Spark’s eight-channel spatial audio offers high-quality immersive experiences but requires developers to implement accessibility features manually. The platform provides powerful tools for creating realistic soundscapes, but lacks the automatic integration with assistive technologies that ARKit offers.

Google ARCore’s approach emphasizes environmental understanding and context-aware audio processing. The platform’s machine learning capabilities enable automatic audio descriptions and obstacle detection, but spatial audio features require more technical implementation compared to the other platforms.

Alternative Interaction Methods for Motor Disabilities

Traditional AR interactions rely heavily on precise hand gestures and touch controls that can exclude users with motor disabilities. Each platform offers different solutions for alternative interaction methods, with varying degrees of accessibility support.

Voice Control Implementation

Voice commands provide the most universal alternative interaction method across all three platforms. ARKit applications can integrate with iOS voice control features, allowing users to perform complex actions through spoken commands. The system supports custom voice shortcuts that developers can map to specific AR functions.

Meta Spark applications benefit from Quest device voice recognition capabilities. Users can navigate menus, select objects, and trigger actions through natural language commands. The platform’s voice processing includes contextual understanding that interprets user intent even when commands aren’t perfectly phrased.

ARCore applications can leverage Google Assistant integration for voice control functionality. The platform supports both simple commands and complex multi-step interactions through spoken input. Google’s natural language processing capabilities enable more flexible voice interaction compared to rigid command structures.

Gaze-Based Controls

Eye tracking provides another important alternative for users who cannot perform hand gestures effectively. Meta Spark includes gaze-based selection capabilities for compatible devices, allowing users to activate objects and navigate interfaces through eye movements.

ARKit supports gaze tracking through device cameras and machine learning algorithms. Developers can implement eye-based selection and navigation features that work alongside voice commands to create multimodal interaction systems. This combination provides backup options when one interaction method fails or becomes unavailable.

Google ARCore does not expose a dedicated eye-tracking API, so gaze is typically approximated from head pose or estimated with separate machine learning models. Applications can use this estimate of where users are looking to predict their interaction intent. This capability enables hands-free selection and navigation that works well for users with motor disabilities.

Switch Control Integration

For users with severe motor disabilities, switch control provides essential access to digital interfaces. ARKit applications can integrate with iOS switch control features, allowing external switches to trigger AR interactions. This capability makes augmented reality accessible to users who rely on assistive technology for all computer interaction.

Meta Spark supports switch control through Quest device accessibility settings. External switches can replace gesture controls, making it possible for users with limited mobility to participate in AR experiences. The platform allows developers to map switch inputs to any AR function or navigation action.

ARCore applications can integrate with Android’s switch access features. The platform supports external switch devices that can trigger selections, navigate menus, and control AR content. This functionality ensures that users with motor disabilities aren’t excluded from augmented reality experiences.
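Across all three platforms, single-switch access usually relies on scanning: a highlight steps through the available actions on a timer, and pressing the switch selects whichever action is highlighted. The sketch below models that loop in plain Python. The class name, the 1.5 second default interval, and the action names are assumptions, and system-level switch features on iOS and Android handle this for standard interface elements.

```python
from typing import List, Sequence


class SwitchScanner:
    """Single-switch auto-scanning over a fixed list of actions."""

    def __init__(self, items: Sequence[str], interval_s: float = 1.5) -> None:
        if not items:
            raise ValueError("items must not be empty")
        if interval_s <= 0:
            raise ValueError("interval_s must be positive")
        self.items: List[str] = list(items)
        self.interval_s = interval_s
        self.index = 0
        self._since_step = 0.0

    @property
    def highlighted(self) -> str:
        return self.items[self.index]

    def tick(self, dt: float) -> str:
        """Advance time; move the highlight once per elapsed interval."""
        self._since_step += dt
        while self._since_step >= self.interval_s:
            self._since_step -= self.interval_s
            self.index = (self.index + 1) % len(self.items)
        return self.highlighted

    def press(self) -> str:
        """Select the highlighted item and restart scanning from the top."""
        selected = self.highlighted
        self.index = 0
        self._since_step = 0.0
        return selected
```

Custom AR controls that are drawn in the 3D scene are often invisible to system switch scanning, which is why an application-level fallback like this can be useful. The scan interval should be adjustable per user.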

Visual Accessibility Features

Users with visual impairments face unique challenges in AR environments that rely heavily on visual information. Each platform addresses these challenges through different approaches to alternative feedback and interface design.

Screen Reader Compatibility

ARKit provides the strongest screen reader integration through its established relationship with VoiceOver. AR applications can announce object locations, describe available interactions, and provide spatial information through synthesized speech. The platform maintains synchronization between visual and audio feedback to create coherent experiences.

Meta Spark applications require manual implementation of screen reader features. Developers must add audio descriptions and voice feedback to make AR content accessible to users with visual impairments. The platform provides APIs for speech synthesis but doesn’t include automatic accessibility features.

Google ARCore can integrate with Android’s TalkBack screen reader through custom implementation. The platform supports audio descriptions and voice feedback, but requires developers to design these features into their applications. ARCore’s machine learning capabilities can automatically generate descriptions of detected objects and scenes.

High Contrast and Text Sizing

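Visual accessibility features help users with low vision and color blindness participate in AR experiences. ARKit applications can honor iOS accessibility settings such as high contrast modes and dynamic text sizing. Interface overlays built with standard UI frameworks pick these settings up with little extra work, while content rendered inside the 3D scene has to read the settings and adapt explicitly. Users don’t need separate configuration within AR applications when developers wire this up.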

Meta Spark includes color correction features specifically designed for users with color blindness. The platform can adjust color palettes and contrast ratios to improve visibility for users with different types of color vision deficiencies. These features work across all Meta AR applications and filters.

ARCore applications can access Android’s accessibility settings for contrast and text sizing. The platform supports customizable visual themes that adapt to user preferences and vision needs. Developers can implement automatic adjustments that respond to system accessibility settings.
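Whichever platform supplies the settings, the check behind a "sufficient contrast" decision is the WCAG contrast ratio. The sketch below implements the WCAG 2.x relative luminance and contrast formulas for 8-bit sRGB colors. The function names are my own, and applying the web threshold of 4.5:1 to text overlaid on a live camera feed is an assumption, since the background in AR is not a fixed color.

```python
from typing import Tuple

RGB = Tuple[int, int, int]


def _linear(channel_8bit: int) -> float:
    """Convert one 8-bit sRGB channel to linear light."""
    c = channel_8bit / 255.0
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(rgb: RGB) -> float:
    """WCAG relative luminance: 0.0 for black up to 1.0 for white."""
    r, g, b = (_linear(v) for v in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(foreground: RGB, background: RGB) -> float:
    """WCAG contrast ratio, from 1.0 (identical) to 21.0 (black on white)."""
    lighter, darker = sorted(
        (relative_luminance(foreground), relative_luminance(background)),
        reverse=True,
    )
    return (lighter + 0.05) / (darker + 0.05)
```

For AR labels, a common workaround is to draw text on an opaque or semi-opaque backing panel so the ratio can be computed against a known color instead of the unpredictable camera image.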

Audio Descriptions

Providing audio descriptions for visual AR content ensures that users with visual impairments understand what’s happening in their environment. ARKit’s accessibility component system makes it easy for developers to add voice descriptions for virtual objects and their spatial relationships.

Meta Spark requires manual implementation of audio descriptions, but provides text-to-speech capabilities that can announce information about AR content. Developers must design these features into their applications rather than relying on automatic accessibility support.

Google ARCore’s machine learning capabilities can automatically generate audio descriptions for detected objects and scenes. The platform can identify items in the user’s environment and provide spoken descriptions through synthesized speech. This feature proves especially valuable for navigation and environmental awareness applications.

Testing and Compliance Considerations

Accessibility testing for AR applications requires new approaches that traditional web accessibility testing tools cannot address. Developers need specialized methods to ensure their AR experiences meet ADA compliance requirements and WCAG guidelines adapted for spatial computing environments.

Automated Testing Limitations

Current automated accessibility testing tools like axe-core and WAVE work well for traditional web content but struggle with AR interfaces. These tools cannot evaluate spatial audio placement, gesture accessibility, or voice control functionality. Developers must supplement automated testing with manual evaluation methods specifically designed for AR environments.

New accessibility testing tools are emerging specifically for XR applications, but coverage remains limited compared to web accessibility scanners. Organizations developing AR applications need to invest in specialized testing approaches that address the unique challenges of spatial computing accessibility.
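Until dedicated XR tools mature, teams can cover part of the gap with simple project-specific checks that run in continuous integration. The sketch below audits a list of scene-object records for two basics: every object has a spoken label, and every interactive object offers at least one input that is not a hand gesture. The record keys and the rule set are hypothetical and would need to mirror how a given project stores its scene metadata.

```python
from typing import Dict, List

NON_GESTURE_INPUTS = {"voice", "gaze", "switch"}


def audit_scene(objects: List[Dict]) -> List[str]:
    """Return human-readable issues found in scene-object records.

    Each record is a dict with the hypothetical keys "name",
    "interactive", "label" (text for screen readers) and "inputs"
    (a collection of supported input methods).
    """
    issues: List[str] = []
    for obj in objects:
        name = obj.get("name", "<unnamed>")
        if not (obj.get("label") or "").strip():
            issues.append(f"{name}: missing spoken label")
        if obj.get("interactive"):
            inputs = set(obj.get("inputs", ()))
            if not inputs & NON_GESTURE_INPUTS:
                issues.append(f"{name}: no non-gesture input method")
    return issues
```

A check like this catches omissions, not usability problems. It cannot tell whether a label is meaningful or whether a voice command is discoverable, so it complements manual testing with disabled users rather than replacing it.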

Manual Testing Approaches

Manual accessibility testing for AR applications requires involving users with disabilities throughout the development process. Traditional usability testing methods must adapt to account for spatial interactions, alternative input methods, and assistive technology compatibility.

Testing should include users with different types of disabilities to identify barriers that automated tools miss. Visual impairments, hearing loss, motor disabilities, and cognitive challenges each create different accessibility requirements in AR environments.

Compliance Standards

WCAG guidelines provide some direction for AR accessibility, but standards specifically designed for spatial computing remain under development. Organizations must interpret existing accessibility requirements in the context of AR technology while preparing for more specific standards that may emerge.

The European Accessibility Act, which has applied since 28 June 2025, may cover AR applications, making compliance testing even more important for organizations serving European markets. Penalties for accessibility violations are set by individual member states and can be substantial, making proactive testing a business necessity rather than just good practice.

Future Outlook for AR Accessibility

The future of AR accessibility depends on continued collaboration between platform developers, accessibility experts, and users with disabilities. Machine learning and artificial intelligence offer promising opportunities to automate many accessibility features that currently require manual implementation.

AI-Powered Accessibility Features

Artificial intelligence can automatically generate audio descriptions, detect potential accessibility barriers, and adapt interfaces based on user needs. Future AR platforms may include built-in accessibility features that work without requiring developers to implement them manually.

Voice recognition and natural language processing continue improving, making voice control more reliable and flexible. These advances will benefit all users but prove especially important for people with motor disabilities who rely on alternative interaction methods.

Standards Development

Industry standards for AR accessibility continue evolving through organizations like the W3C and XR Association. Future guidelines will provide clearer direction for developers creating accessible AR experiences while ensuring consistency across different platforms and applications.

Legal requirements for digital accessibility are expanding to include emerging technologies. Organizations developing AR applications should prepare for more specific accessibility requirements that may apply to spatial computing environments.

Platform Integration

Better integration between AR platforms and existing assistive technologies will reduce the burden on individual developers to implement accessibility features. Future updates may include automatic compatibility with screen readers, voice control systems, and other assistive tools.

Cross-platform accessibility standards could ensure that users with disabilities have consistent experiences regardless of which AR development platform creators choose. This standardization would benefit both users and developers by reducing the complexity of creating accessible AR applications.

Using Automated Tools for Quick Insights (Accessibility-Test.org Scanner)

Automated testing tools provide a fast way to identify many common accessibility issues. They can quickly scan your website and point out problems that might be difficult for people with disabilities to overcome.

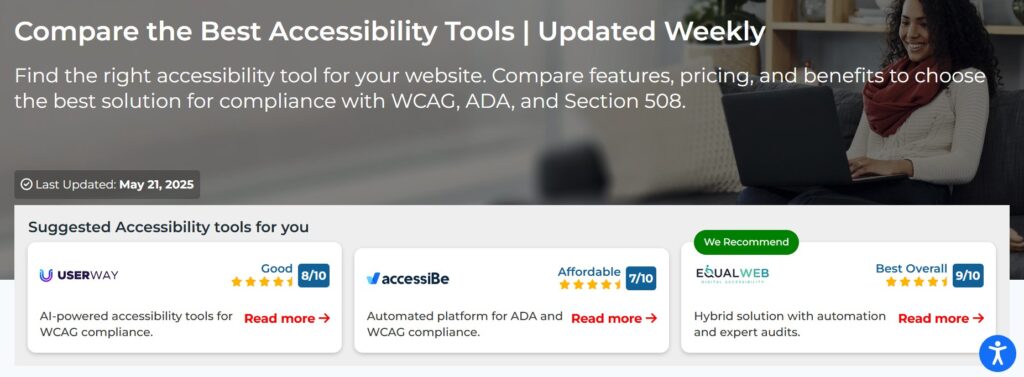

Visit Our Tools Comparison Page!

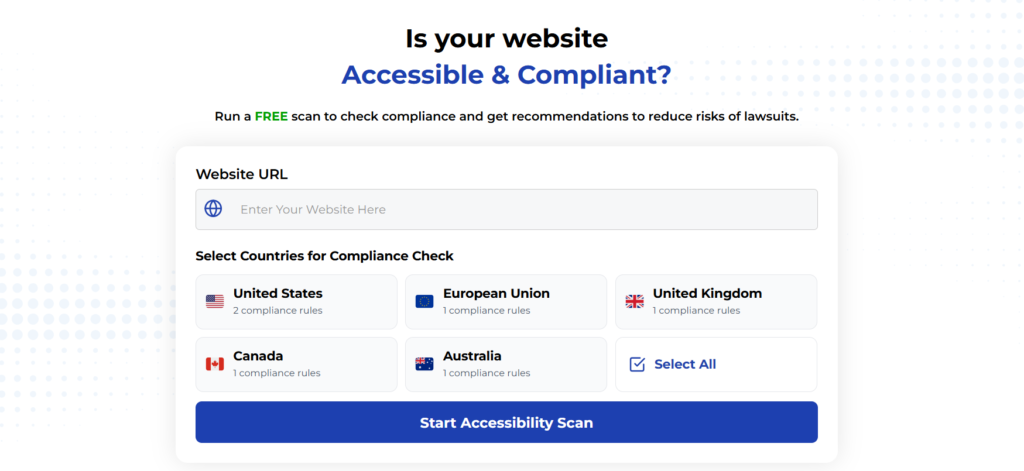

Run a FREE scan to check compliance and get recommendations to reduce risks of lawsuits

Final Thoughts

Apple ARKit currently offers the strongest accessibility features through its integration with iOS accessibility services and established assistive technologies. The platform’s spatial audio capabilities, voice control integration, and screen reader compatibility make it the most accessible choice for developers creating inclusive AR experiences.

Meta Spark provides powerful spatial audio tools and voice control features but requires more manual implementation of accessibility features. The platform’s focus on social AR experiences creates opportunities for inclusive design, but developers must invest additional effort to ensure their applications work for users with disabilities.

Google ARCore leverages machine learning capabilities to provide automatic environmental understanding and object recognition that benefits users with visual impairments. The platform’s integration with Android accessibility services offers good potential for accessible AR development, but requires more technical implementation compared to ARKit.

All three platforms show promise for creating accessible AR experiences, but none fully address the accessibility challenges that users with disabilities face in spatial computing environments. Future development should focus on automatic accessibility features, better integration with assistive technologies, and clearer standards for inclusive AR design.

Organizations developing AR applications should choose platforms based on their specific accessibility requirements and target user needs. Regular testing with users who have disabilities remains essential regardless of which development platform teams select. As AR technology continues evolving, maintaining focus on inclusive design will ensure that these powerful tools benefit everyone rather than creating new barriers for people with disabilities.
