ChatGPT vs Claude vs Gemini

The landscape of accessibility testing has shifted dramatically now that artificial intelligence has entered the picture. Traditional automated tools detect only around 30-40% of accessibility issues, and AI-powered solutions are pushing that number higher, with some vendors claiming detection rates of up to 57% by volume. That raises a practical question for accessibility professionals: which AI assistant delivers the most accurate and helpful accessibility testing support? After extensive testing with real-world scenarios, ChatGPT emerges as the most versatile option for accessibility testing workflows. Claude excels at ethical considerations and detailed technical analysis, while Gemini shines in multimodal accessibility features but lacks depth in WCAG-specific guidance.

Why AI Tools Matter for Accessibility Testing

The traditional approach to accessibility testing has always been a mix of automated scans and manual audits. But here’s the reality: automated testing tools provide a fast way to identify many common accessibility issues, yet they miss most of the barriers that affect real users. That’s where AI tools step in, offering a middle ground between pure automation and expensive manual testing.

What makes AI different from standard accessibility scanners? These tools can interpret context in ways that rule-based systems simply can’t. When you share a screenshot with Claude and ask about potential accessibility barriers, it goes beyond a checklist of individual violations: it considers whether the visual hierarchy makes sense, whether color is the only way information is conveyed, and whether interactive elements would be confusing for keyboard users.

The numbers tell an interesting story about where this technology is heading. According to recent data from Deque Systems, AI-enhanced testing capabilities now allow automated detection of up to 57% of accessibility issues by volume, with projections suggesting this could reach nearly 70% by the end of 2025. That’s still not perfect, but it’s a significant improvement over traditional automated tools.

The Current State of Automated Testing

Before diving into specific AI tools, it’s worth understanding what traditional automated testing can and can’t do. Tools like WAVE can quickly scan your website and point out problems that might be difficult for people with disabilities to overcome. These tools excel at catching technical violations—missing form labels, insufficient color contrast, improper heading structures.
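To make the idea of a rule-based check concrete, here is a minimal sketch of one: it flags skipped heading levels (for example, an `h2` followed directly by an `h4`), one of the heading-structure problems these scanners report. It uses only Python’s standard-library `html.parser` and is an illustration, not how WAVE or any other tool is implemented. The function name `find_heading_skips` is hypothetical, and skipped levels are usually treated as a best-practice warning rather than a strict WCAG failure.

```python
from html.parser import HTMLParser


class HeadingCollector(HTMLParser):
    """Records the level of every <h1>-<h6> start tag, in document order."""

    def __init__(self):
        super().__init__()
        self.levels = []

    def handle_starttag(self, tag, attrs):
        # html.parser passes tag names in lower case, so <H2> arrives as "h2".
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self.levels.append(int(tag[1]))


def find_heading_skips(html):
    """Return (previous_level, next_level) pairs where the outline jumps down more than one level."""
    collector = HeadingCollector()
    collector.feed(html)
    collector.close()
    levels = collector.levels
    # Moving back up (h4 -> h2) is fine; only downward jumps of two or more levels are flagged.
    return [(prev, nxt) for prev, nxt in zip(levels, levels[1:]) if nxt > prev + 1]


print(find_heading_skips("<h1>Guide</h1><h2>Setup</h2><h4>Details</h4><h2>Usage</h2><h3>Tips</h3>"))
# [(2, 4)]
```

Notice that the check can only compare numbers. It has no way of knowing whether the heading text actually describes the section that follows, which is the kind of judgment automated scanners leave to people.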

But automated testing has clear limitations. It can’t tell you if your website actually makes sense to someone using a screen reader. It won’t catch situations where all the technical requirements are met but the user experience is still confusing or frustrating. Most importantly, it can’t evaluate whether your content truly serves people with different cognitive abilities or learning differences.

This is where AI tools become particularly valuable. They can bridge some of these gaps by providing more nuanced analysis and helping with tasks that require understanding context and meaning.

Where Human Expertise Still Rules

While AI tools are getting more sophisticated, they’re not replacing human accessibility experts anytime soon. Research shows that even the most advanced AI tools aren’t reliable enough to fully conduct a website accessibility audit. They make errors, struggle with complex multi-layered requests, and can’t match the speed and convenience of specialized testing tools for basic scans.

The real value of AI in accessibility testing lies in augmenting human expertise rather than replacing it. These tools work best when used by people who already understand accessibility principles and can spot when the AI gets something wrong.

ChatGPT for Accessibility Testing

ChatGPT has become the go-to AI assistant for many accessibility professionals, and there are good reasons for its popularity. The tool’s strength lies in its versatility and ability to handle a wide range of accessibility-related tasks within a single conversation.

Alt Text Generation Capabilities

One of the most practical applications of ChatGPT in accessibility work is generating alt text for images. Many professionals now use it to produce a first draft, which they then review and edit. The tool is particularly good at identifying what’s important in complex screenshots or technical diagrams.

For example, when you upload a screenshot of a web interface, ChatGPT can usually distinguish between decorative elements and meaningful content. It understands that users don’t need to know about every button and menu item visible in a screenshot—they need to understand the key message or functionality being demonstrated.

The quality varies depending on image complexity. ChatGPT handles simple product photos and basic interface screenshots well, but struggles with charts, graphs, or images that contain multiple layers of information. It also sometimes includes unnecessary details or misses important context that would help screen reader users understand why the image matters.
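Because AI-drafted alt text still needs human review, it helps to know which images need attention in the first place. The minimal sketch below, again using only Python’s standard library, sorts `<img>` elements into three illustrative buckets: no text alternative at all, an empty `alt` attribute (which tells screen readers the image is decorative, a call a person should confirm), and a non-empty alternative whose wording is worth reviewing. The class and bucket names are hypothetical, and the sketch ignores other ways of hiding images, such as `role="presentation"`.

```python
from html.parser import HTMLParser


class ImageAltAudit(HTMLParser):
    """Sorts <img> elements by how, or whether, they provide a text alternative."""

    def __init__(self):
        super().__init__()
        self.missing = []     # no alt and no ARIA name: needs a text alternative
        self.decorative = []  # empty alt: marked decorative; confirm that is intended
        self.described = []   # non-empty alt or ARIA name: review the wording

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        src = attributes.get("src") or "(no src)"
        # A bare attribute such as <img alt> arrives with the value None.
        has_alt = "alt" in attributes
        alt_text = (attributes.get("alt") or "").strip()
        has_aria_name = bool(attributes.get("aria-label") or attributes.get("aria-labelledby"))
        if alt_text or has_aria_name:
            self.described.append(src)
        elif has_alt:
            self.decorative.append(src)
        else:
            self.missing.append(src)


def audit_images(html):
    auditor = ImageAltAudit()
    auditor.feed(html)
    auditor.close()
    return auditor


report = audit_images(
    '<img src="logo.png" alt="Acme logo">'
    '<img src="divider.png" alt="">'
    '<img src="sales-chart.png">'
    '<img src="gear.svg" aria-label="Settings">'
)
print(report.missing)     # ['sales-chart.png']
print(report.decorative)  # ['divider.png']
print(report.described)   # ['logo.png', 'gear.svg']
```

A list like `report.missing` gives both the assistant and the human reviewer a concrete set of images to draft and check descriptions for.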

Code Review and WCAG Guidance

ChatGPT can review code snippets for common accessibility issues, though its accuracy depends heavily on how you frame your questions. When you ask specific questions about WCAG compliance, it generally provides accurate information about current standards. It’s particularly helpful for explaining accessibility concepts in plain language.

However, ChatGPT has some notable limitations when it comes to technical accuracy. It sometimes provides outdated information about accessibility standards or suggests solutions that don’t fully address the underlying issues. The tool works best when used by someone who can verify its suggestions against actual WCAG documentation.

One area where ChatGPT excels is in explaining accessibility concepts to team members who might not have technical backgrounds. It can break down complex WCAG success criteria into actionable steps and help teams understand why certain accessibility requirements matter.

Strengths and Limitations

ChatGPT’s biggest strength in accessibility testing is its conversational nature. You can have an ongoing dialogue about accessibility challenges, refining your questions based on previous answers. This makes it particularly useful for troubleshooting specific problems or exploring different approaches to accessibility implementation.

The tool also integrates well into existing workflows. Many accessibility professionals use it to draft emails explaining accessibility issues to developers, create user stories that incorporate accessibility requirements, or generate testing scenarios for manual audits.

But ChatGPT has clear limitations that users need to understand. It can’t test websites directly; you need to provide screenshots, code snippets, or detailed descriptions of what you’re working with. Its knowledge of evolving accessibility standards and newly released assistive technologies can also lag behind the latest developments.

Claude’s Approach to Accessibility

Claude takes a different approach to accessibility testing, one that emphasizes detailed analysis and ethical considerations. Developed by Anthropic with a focus on responsible AI, Claude often provides more nuanced responses when dealing with accessibility questions that involve human judgment.

Technical Documentation Analysis

Claude excels at analyzing technical documentation and identifying potential accessibility barriers in written content. When you paste lengthy WCAG documentation or accessibility reports into Claude, it can summarize key points and highlight areas that need attention.

The tool is particularly strong at understanding the relationship between different accessibility requirements. For instance, when discussing form accessibility, Claude can explain how proper labeling, keyboard navigation, and screen reader compatibility depend on one another, and how together they shape overall usability for people with disabilities.

Claude also does well with analyzing existing accessibility statements or audit reports. It can identify gaps in coverage, suggest improvements to language, and help organizations understand their current accessibility posture more clearly.

Ethical AI and Accessibility Standards

What sets Claude apart is its emphasis on the ethical dimensions of accessibility work. The tool consistently frames accessibility not just as a compliance requirement but as a matter of human rights and inclusion. This perspective can be valuable when trying to build organizational buy-in for accessibility initiatives.

Claude is also more likely to acknowledge the limitations of AI in accessibility testing. It regularly reminds users that automated tools can’t replace human judgment and that real accessibility testing requires input from people with disabilities.

This ethical framework extends to how Claude approaches accessibility recommendations. Rather than just providing technical solutions, it often discusses the user impact of different approaches and helps teams think through the human consequences of their design decisions.

Real-World Performance

In practical testing scenarios, Claude tends to provide more detailed and thoughtful responses than ChatGPT, but this can sometimes make it less efficient for quick questions. The tool shines when you need deep analysis of complex accessibility challenges or when you’re trying to understand the broader implications of accessibility decisions.

Claude is particularly good at identifying accessibility issues that involve cognitive load or information architecture. While it can’t test these things directly, it can analyze content structure and identify potential barriers for users with cognitive disabilities or attention differences.

However, Claude can be more conservative in its recommendations, sometimes suggesting more extensive changes than might be strictly necessary for WCAG compliance. This thoroughness can be valuable for organizations aiming for high-quality accessibility, but might frustrate teams working under tight deadlines.

Google Gemini’s Accessibility Features

Google Gemini brings unique capabilities to accessibility testing, particularly through its integration with Google’s existing accessibility ecosystem and its multimodal analysis features. The tool has made significant strides in 2025, especially in areas related to vision and hearing accessibility.

Integration with Google Accessibility Tools

Gemini’s biggest advantage in accessibility testing comes from its connection to Google’s broader accessibility infrastructure. The tool can provide insights that connect directly to features like TalkBack, Chrome’s adaptive text zoom, and other Google accessibility services.

This integration means Gemini can offer more specific guidance about how accessibility features will actually work in real-world scenarios. For example, when discussing screen reader compatibility, Gemini can reference how specific implementations will behave with TalkBack’s Gemini-powered features.

The tool also benefits from Google’s extensive data about how people with disabilities actually use technology. This real-world usage data helps Gemini provide more practical recommendations rather than just theoretical compliance guidance.

Multimodal Analysis Capabilities

Where Gemini really stands out is in its ability to analyze multiple types of content simultaneously. The tool can examine images, text, and interface elements together to provide more holistic accessibility assessments.

This capability is particularly valuable for evaluating complex interfaces or multimedia content. Gemini can identify situations where multiple accessibility barriers combine to create significant usability problems, even when each individual element might technically meet WCAG requirements.

The tool’s vision capabilities have improved significantly in 2025, making it particularly good at identifying visual accessibility issues like poor color contrast or confusing visual hierarchies. It can analyze screenshots and provide specific suggestions for improving visual accessibility.

TalkBack and Vision Enhancements

Gemini’s integration with TalkBack represents a significant advancement in AI-powered accessibility features. The tool can describe how content is likely to be experienced by screen reader users and identify potential navigation challenges or confusing interactions.

This capability extends to analyzing how dynamic content updates will be announced to screen reader users. Gemini can review interface mockups or descriptions and predict where users might encounter difficulties with live regions or status updates.

However, these advanced features are primarily available within Google’s ecosystem. Organizations using other platforms or assistive technologies may not be able to take full advantage of Gemini’s specialized accessibility capabilities.

Head-to-Head Performance Comparison

After extensive testing with real accessibility scenarios, clear differences emerge between these three AI tools. Each has distinct strengths and weaknesses that make them more or less suitable for different types of accessibility work.

Alt Text Quality Assessment

For alt text generation, ChatGPT provides the most consistently usable results across different image types. The tool strikes a good balance between thoroughness and brevity, usually capturing the essential information without overwhelming users with unnecessary details.

Claude tends to generate longer, more detailed alt text that sometimes includes interpretive elements. While this can be valuable for complex images, it often requires editing to meet best practices for concise alt text. Claude is particularly good at understanding the context of images within larger documents or presentations.

Gemini excels with images that contain text or technical diagrams. Its vision capabilities allow it to accurately transcribe text within images and identify important structural elements like charts or graphs. However, it sometimes struggles with more subjective aspects of alt text, like determining what emotional tone or atmosphere an image conveys.

WCAG Compliance Accuracy

When it comes to providing accurate information about WCAG requirements, Claude generally delivers the most reliable guidance. The tool’s responses typically include appropriate caveats about the complexity of accessibility standards and the need for human judgment in implementation.

ChatGPT provides accessible explanations of WCAG requirements but sometimes oversimplifies complex success criteria. It’s particularly good at explaining why certain requirements exist and how they benefit users with disabilities, but may miss nuances in technical implementation.

Gemini’s WCAG guidance is somewhat inconsistent, depending heavily on the specific area of accessibility being discussed. The tool is strongest when discussing visual accessibility requirements but less reliable for complex interaction patterns or cognitive accessibility considerations.

User Experience and Interface

ChatGPT offers the most user-friendly experience for accessibility testing workflows. Its conversational interface makes it easy to ask follow-up questions, refine requests, and explore different aspects of accessibility challenges. The tool integrates well with existing accessibility workflows and doesn’t require learning new interaction patterns.

Claude provides a more structured experience that some accessibility professionals prefer for detailed analysis work. The tool’s longer, more thoughtful responses can be valuable when working through complex accessibility decisions, though they may slow down rapid testing scenarios.

Gemini’s interface works well for quick accessibility checks but can be frustrating for more complex analysis. The tool’s integration with Google services is valuable for teams already using Google Workspace, but may be less useful for organizations using other platforms.

Practical Testing Scenarios

To really understand how these AI tools perform in accessibility testing, it’s important to look at specific scenarios that accessibility professionals encounter regularly. Here’s how each tool handles common testing challenges.

Form Accessibility Analysis

Forms represent one of the most critical areas for accessibility testing, and each AI tool approaches form analysis differently. ChatGPT excels at identifying missing form labels and explaining the relationship between labels and form controls in language that developers can easily understand.

When you describe a form structure to ChatGPT, it can quickly identify potential keyboard navigation issues and suggest improvements for error messaging. The tool is particularly good at explaining how form validation should work for screen reader users.

Claude takes a more systematic approach to form analysis, often providing detailed checklists of accessibility requirements for different types of form elements. The tool is excellent at identifying subtle issues like forms that might be technically accessible but still confusing for users with cognitive disabilities.

Gemini’s strength in form analysis lies in its ability to understand visual form layouts. When you provide screenshots of forms, Gemini can identify visual accessibility issues like poor contrast in form labels or confusing visual groupings of related fields.
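Whichever assistant you use, a quick structural check can confirm the most basic label problems before you ask for deeper analysis. This minimal sketch, assuming plain server-rendered HTML, lists form controls that have no programmatic label: no `<label for>` pointing at their `id`, no wrapping `<label>`, and no `aria-label` or `aria-labelledby`. A placeholder alone does not count as a label. The names are hypothetical, and the sketch does not verify that `aria-labelledby` points at an existing element or that the label text is meaningful, which is where human or AI review still matters.

```python
from html.parser import HTMLParser

# Inputs that are not shown, or that get their accessible name from value/alt instead of a label.
EXEMPT_INPUT_TYPES = {"hidden", "submit", "reset", "button", "image"}


class FormLabelAudit(HTMLParser):
    """Collects form controls and the ids that <label for="..."> elements point at."""

    def __init__(self):
        super().__init__()
        self.candidates = []        # (id, display name) of controls with no wrapping label or ARIA name
        self.label_targets = set()  # ids referenced by <label for="...">
        self.label_depth = 0        # > 0 while parsing inside a <label> element

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "label":
            self.label_depth += 1
            if attributes.get("for"):
                self.label_targets.add(attributes["for"])
            return
        if tag not in {"input", "select", "textarea"}:
            return
        if tag == "input" and (attributes.get("type") or "text").lower() in EXEMPT_INPUT_TYPES:
            return
        if attributes.get("aria-label") or attributes.get("aria-labelledby"):
            return  # named through ARIA
        if self.label_depth > 0:
            return  # implicitly labeled by a wrapping <label>
        self.candidates.append((attributes.get("id"), attributes.get("name") or tag))

    def handle_endtag(self, tag):
        if tag == "label" and self.label_depth > 0:
            self.label_depth -= 1


def find_unlabeled_controls(html):
    """Return display names of controls that no label or ARIA attribute names."""
    audit = FormLabelAudit()
    audit.feed(html)
    audit.close()
    # <label for> may appear after its control, so ids are matched only once parsing is done.
    return [name for control_id, name in audit.candidates if control_id not in audit.label_targets]


form = """
<form>
  <label for="email">Email</label> <input id="email" name="email" type="email">
  <label>Phone <input name="phone" type="tel"></label>
  <input name="search" aria-label="Search">
  <input id="zip" name="zip" placeholder="ZIP code">
  <select name="country"><option>US</option></select>
  <input type="hidden" name="token" value="abc">
  <input type="submit" value="Send">
</form>
"""
print(find_unlabeled_controls(form))  # ['zip', 'country']
```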

Color Contrast Evaluation

Color contrast is an area where AI tools can provide valuable assistance, though none can replace actual contrast measurement tools. ChatGPT can identify obvious contrast problems when you describe color combinations, but its judgments are estimates, not the precise contrast calculations that WCAG conformance depends on.

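Claude is more likely to recommend using dedicated contrast checking tools rather than relying on visual assessment. The tool provides good guidance about the different contrast requirements for various types of text and interface elements, such as the WCAG 2.x Level AA minimum of 4.5:1 for normal text versus 3:1 for large text and for user interface components.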

Gemini’s vision capabilities allow it to analyze screenshots for potential contrast issues, though its assessments should always be verified with proper contrast measurement tools. The tool is particularly good at identifying situations where color is being used as the only way to convey important information.
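For the precise measurement that all three assistants defer to, the WCAG 2.x contrast ratio is straightforward to compute. The sketch below follows the WCAG definitions of relative luminance and contrast ratio for 8-bit sRGB hex colors. The function names are hypothetical, and it checks only the Level AA text thresholds from success criterion 1.4.3 (4.5:1 for normal text, 3:1 for large text, meaning at least 18pt, or 14pt bold). It assumes solid foreground and background colors, so text over images or gradients still needs another approach.

```python
def _linearize(channel):
    """Convert an 8-bit sRGB channel (0-255) to linear light, per the WCAG 2.x definition."""
    c = channel / 255
    # WCAG 2.0/2.1 print the threshold as 0.03928 (sRGB uses 0.04045);
    # no 8-bit value falls between the two, so the choice does not change results.
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color):
    """Relative luminance of a color written as '#rrggbb' or '#rgb'."""
    digits = hex_color.lstrip("#")
    if len(digits) == 3:
        digits = "".join(ch * 2 for ch in digits)
    r, g, b = (int(digits[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linearize(r) + 0.7152 * _linearize(g) + 0.0722 * _linearize(b)


def contrast_ratio(color_a, color_b):
    """(L1 + 0.05) / (L2 + 0.05), where L1 is the lighter color; ranges from 1 to 21."""
    lighter, darker = sorted((relative_luminance(color_a), relative_luminance(color_b)), reverse=True)
    return (lighter + 0.05) / (darker + 0.05)


def meets_aa_text(ratio, large_text=False):
    """WCAG 2.x SC 1.4.3 (Level AA): 4.5:1 for normal text, 3:1 for large text."""
    return ratio >= (3.0 if large_text else 4.5)


for fg in ("#767676", "#777777"):
    ratio = contrast_ratio(fg, "#ffffff")
    print(fg, round(ratio, 2), meets_aa_text(ratio))
# #767676 4.54 True
# #777777 4.48 False
```

The two grays differ by a single step per channel, yet one passes and one fails, which is why an eyeball assessment of a screenshot, human or AI, can’t settle borderline cases.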

Navigation Structure Review

When evaluating website navigation for accessibility, each AI tool brings different strengths to the analysis. ChatGPT excels at explaining how navigation structures should work for keyboard users and identifying where skip links are needed.

Claude provides thorough analysis of navigation hierarchies and can identify situations where navigation might be technically accessible but still difficult for users with cognitive disabilities to understand. The tool is particularly good at evaluating the logical flow of navigation elements.

Gemini can analyze navigation screenshots to identify visual hierarchy issues and suggest improvements for users who rely on visual cues for navigation. However, it’s less effective at evaluating the logical structure of navigation for screen reader users.
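Skip links are one navigation requirement that is easy to spot-check mechanically. The minimal sketch below applies a simple heuristic, assumed here for illustration: the first link on the page should be a same-page link (`href="#..."`) whose target `id` actually exists, a common way of providing the bypass mechanism that WCAG success criterion 2.4.1 asks for. It does not check whether the link becomes visible on keyboard focus, or whether landmarks or headings satisfy the criterion instead, so treat its output as a prompt for manual keyboard testing rather than a verdict.

```python
from html.parser import HTMLParser


class SkipLinkAudit(HTMLParser):
    """Records the first <a href> on the page and every id attribute."""

    def __init__(self):
        super().__init__()
        self.first_href = None
        self.ids = set()

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if attributes.get("id"):
            self.ids.add(attributes["id"])
        if tag == "a" and self.first_href is None and attributes.get("href"):
            self.first_href = attributes["href"]


def check_skip_link(html):
    """Return a short status string describing the page's first link (hypothetical heuristic)."""
    audit = SkipLinkAudit()
    audit.feed(html)
    audit.close()
    href = audit.first_href
    if href is None:
        return "no links found"
    if not href.startswith("#") or len(href) == 1:
        return "first link is not a same-page skip link"
    # ids are collected across the whole page, so the target may appear after the link.
    if href[1:] not in audit.ids:
        return f"skip link target {href} does not exist"
    return "ok"


page = '<body><a href="#main">Skip to main content</a><nav><a href="/">Home</a></nav><main id="main"></main></body>'
print(check_skip_link(page))  # ok
```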

Cost and Accessibility Considerations

The financial aspects of using AI tools for accessibility testing vary significantly between options, and teams need to consider both direct costs and the broader implications for their accessibility workflows.

Pricing Models for Teams

ChatGPT offers several pricing tiers that can work for different team sizes and usage patterns. The free tier provides substantial capability for occasional accessibility testing support, while paid plans offer faster response times and access to more advanced features. For accessibility teams that need regular AI assistance, the professional plans typically provide good value.

Claude offers subscription plans alongside usage-based API billing, and the API route can be cost-effective for teams that use AI tools intensively for accessibility analysis. The tool’s longer, more detailed responses mean you might get more value per interaction, but because API billing scales with the amount of text processed and generated, they can also raise costs for extensive use.

Gemini’s integration with Google Workspace can provide cost advantages for organizations already using Google’s business tools. However, accessing the most advanced accessibility features may require specific Google service subscriptions that might not be cost-effective for smaller teams.

API Integration Options

For organizations wanting to integrate AI accessibility testing into their development workflows, API access becomes crucial. ChatGPT’s API offers the most flexibility for custom integrations, allowing teams to build accessibility checking into their existing development tools.

Claude’s API provides good capabilities for organizations that want to integrate detailed accessibility analysis into their quality assurance processes. The tool’s strength in document analysis makes it particularly valuable for teams that need to review accessibility documentation or compliance reports regularly.

Gemini’s API integration works well for teams already using Google Cloud services, but may require additional infrastructure investment for organizations using other cloud platforms.
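Whichever API you choose, an integration usually starts with the same step: packaging a code snippet and review instructions into a request. The sketch below builds a provider-neutral list of chat messages. The prompt wording and function names are hypothetical, and no network call is made. The instructions ask the model to label uncertain findings for manual checking, in keeping with a human reviewer staying in the loop.

```python
# Hypothetical review instructions; adapt them to your team's standards and WCAG version.
SYSTEM_PROMPT = (
    "You are assisting an accessibility reviewer. Review the HTML snippet for likely "
    "WCAG 2.2 issues. For each finding, name the success criterion, quote the element, "
    "and suggest a fix. Label anything you are unsure about as 'needs manual check'."
)


def build_review_messages(html_snippet, page_context=""):
    """Return chat-style messages: a system instruction plus a user turn with the snippet."""
    user_text = f"Page context: {page_context or 'not provided'}\n\nHTML to review:\n{html_snippet}"
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": user_text},
    ]


def split_system_prompt(messages):
    """Separate system text from the conversation, for APIs that take it as its own parameter."""
    system = "\n".join(m["content"] for m in messages if m["role"] == "system")
    conversation = [m for m in messages if m["role"] != "system"]
    return system, conversation


messages = build_review_messages('<input id="zip" placeholder="ZIP">', "checkout form")
system, conversation = split_system_prompt(messages)
print(len(messages), len(conversation))  # 2 1
```

With OpenAI’s Python SDK, a list in the first shape can be passed as the `messages` argument to `client.chat.completions.create`. Anthropic’s Messages API instead expects the system text in a separate `system` parameter, which is what the split helper prepares. Either way, the model’s findings belong in a human review queue rather than directly in a compliance report.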

Learning Curves and Training

Different AI tools require different levels of expertise to use effectively for accessibility testing. ChatGPT has the gentlest learning curve, making it accessible to team members who might not have extensive accessibility experience but need to incorporate accessibility considerations into their work.

Claude requires more familiarity with accessibility concepts to use effectively, but provides more educational value for teams that want to build internal accessibility expertise. The tool’s detailed explanations can help team members understand not just what to do, but why specific accessibility requirements matter.

Gemini’s effectiveness depends heavily on familiarity with Google’s accessibility ecosystem. Teams already using Google’s accessibility tools will find it easier to leverage Gemini’s capabilities, while others may need additional training.

The Future of AI in Accessibility Testing

Looking ahead, the role of AI in accessibility testing continues to evolve rapidly. Each of these tools is developing new capabilities that could significantly change how accessibility professionals approach their work.

Emerging Capabilities

ChatGPT is expanding its ability to understand and analyze multimedia content, which could make it more valuable for testing video accessibility and complex interactive interfaces. The tool’s integration capabilities are also improving, potentially allowing for more seamless incorporation into existing accessibility testing workflows.

Claude’s development focuses on improving the accuracy and reliability of its accessibility guidance. Anthropic’s emphasis on responsible AI development means Claude is likely to become more conservative and accurate in its accessibility recommendations over time.

Gemini’s future development appears focused on deeper integration with Google’s accessibility ecosystem and improved multimodal analysis capabilities. The tool’s ability to simultaneously analyze visual, audio, and textual content could make it particularly valuable for testing complex accessibility scenarios.

Integration with Testing Workflows

The most significant changes in AI accessibility testing are likely to come from better integration with existing testing tools and workflows. Rather than using AI tools in isolation, accessibility professionals are beginning to incorporate them into broader testing strategies that combine automated scanning, AI analysis, and human expertise.

This integration approach recognizes that AI tools are most valuable when they augment human capabilities rather than trying to replace them. The future likely holds more sophisticated workflows where AI tools handle initial analysis and flag potential issues for human review, rather than attempting to provide definitive accessibility assessments.

The key to successful AI integration in accessibility testing lies in understanding what each tool does well and designing workflows that leverage those strengths while compensating for limitations through human expertise and specialized testing tools.

Using Automated Tools for Quick Insights (Accessibility-Test.org Scanner)

Automated testing tools provide a fast way to identify many common accessibility issues. They can quickly scan your website and point out problems that might be difficult for people with disabilities to overcome.

Visit Our Tools Comparison Page!

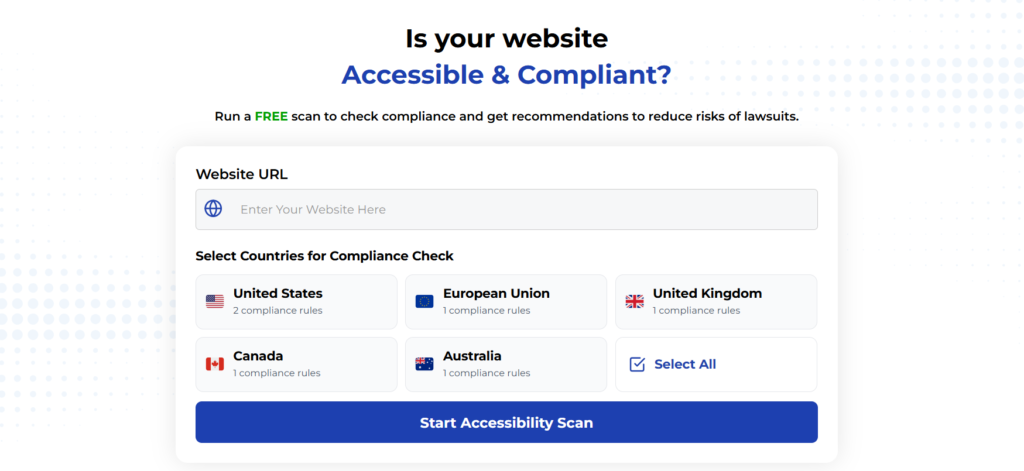

Run a FREE scan to check compliance and get recommendations to reduce risks of lawsuits

Final Thoughts

After extensive testing across multiple accessibility scenarios, ChatGPT emerges as the most versatile choice for most accessibility testing workflows. Its balance of usability, accuracy, and integration capabilities makes it valuable for teams with varying levels of accessibility expertise. The tool’s conversational interface and ability to handle diverse accessibility questions make it particularly useful for day-to-day accessibility work.

Claude serves as an excellent choice for organizations that prioritize thorough analysis and ethical considerations in their accessibility work. While it may be slower for quick questions, its detailed responses and systematic approach to accessibility challenges provide significant value for complex projects or teams building internal accessibility expertise.

Gemini offers unique advantages for teams already invested in Google’s ecosystem, particularly those working on applications that integrate with Google’s accessibility services. Its multimodal capabilities show promise for future accessibility testing scenarios, though current limitations in WCAG-specific guidance may limit its usefulness for compliance-focused work.

The reality is that no single AI tool currently provides everything accessibility professionals need. The most effective approach combines these tools strategically, using each for its particular strengths while maintaining human oversight and verification. As these tools continue to evolve, they’re becoming valuable additions to accessibility testing workflows rather than replacements for human expertise.

Want to see how your website currently performs on accessibility standards? Try our free website accessibility scanner to identify immediate improvement opportunities and discover how AI-powered analysis can enhance your accessibility testing process.
