Amazon Alexa vs Google Assistant

Voice interfaces have become essential accessibility tools that remove barriers for people with disabilities while changing how everyone interacts with technology. Amazon Alexa and Google Assistant each offer distinct advantages for users with different accessibility needs, from motor impairments to vision loss. This analysis examines their specific features, integration capabilities, and practical implementation strategies to help organizations and individuals choose the most suitable voice platform for their accessibility requirements.

Voice Technology’s Impact on Digital Accessibility

For many people with disabilities, voice assistants now serve as primary accessibility tools rather than convenience features. These platforms let users with diverse disabilities control their environments, access information, and communicate in ways that traditional input methods such as keyboards, mice, and touchscreens may not allow. This shift from optional convenience to essential tool has changed how people with motor impairments, visual disabilities, and cognitive challenges interact with digital systems.

Recent advancements in voice technology have introduced multimodal functionality that combines voice input with visual or tactile interactions. This integration creates more flexible user experiences by accommodating different abilities and preferences at the same time. Users can request an action by voice and then confirm on screen that it has completed, so no single sense or input method has to carry the whole interaction.

The global reach of voice technology has expanded significantly through enhanced language support, making accessibility features available to broader populations. Voice assistants now recognize numerous languages and dialects, providing critical support to users from varying cultural backgrounds as they engage with technology. This development particularly benefits communities where traditional assistive technologies may be less available or culturally appropriate.

Personalization represents a defining characteristic of modern voice technologies. Through artificial intelligence and machine learning, these systems tailor responses and functionalities based on individual user habits and preferences. This customization ensures that people with unique accessibility needs receive relevant support while improving overall user engagement with the technology.

Amazon Alexa Accessibility Features Analysis

Amazon has developed numerous accessibility features across its Echo device family, focusing on creating alternatives to traditional voice interaction while maintaining the core benefits of hands-free control. These features address specific barriers faced by users with different types of disabilities.

Eye Gaze Control and Motor Accessibility

Amazon introduced Eye Gaze on Alexa as its first feature designed specifically for customers with mobility or speech impairments. Available on Fire Max 11 tablets at no additional cost, this feature allows users to control Alexa using only their eyes. The system works by displaying customizable tiles on the tablet screen that users can activate through eye movements.

Caregivers can customize Eye Gaze dashboards with different Alexa actions, colors, and icons to match individual user needs. This adaptability makes the feature valuable for people with varying levels of motor function or cognitive abilities. The tiles can include common actions like playing music, turning on lights, or accessing information, providing essential independence for users who cannot use traditional input methods.

The implementation of eye-tracking technology represents a significant advancement in making voice technology accessible to users with severe motor limitations. Users who might previously have required extensive assistance can now independently control their smart home environments and access digital services. This capability particularly benefits people with limited arm and hand movement or conditions that affect fine motor control.

Eye Gaze on Alexa works alongside other accessibility features, creating multiple pathways for interaction. Users can combine eye control with voice commands when possible, or rely entirely on eye movements when speech is not feasible. This flexibility accommodates fluctuating abilities that some users experience due to progressive conditions or temporary impairments.

Speech Alternative Features

Amazon recognized that not all users can interact with voice assistants through speech, leading to the development of several alternative input methods. Tap to Alexa enables customers to interact with Alexa through touch instead of voice on Echo Show and select Fire tablet devices. Users can access weather information, news, timers, and other features by tapping the touchscreen rather than speaking commands.

Real Time Text (RTT) adds live chat functionality during Alexa calls and Drop Ins made from Echo Show devices. When activated, a keyboard appears on screen, allowing users to type text that appears in real time on both parties’ screens. This feature proves particularly valuable for users with speech impairments or those who are deaf or hard of hearing, enabling communication without relying on voice interaction.

The Text to Speech function allows users to type phrases on their Fire tablet and have them spoken aloud. This capability helps people with speech disabilities or unique voice patterns communicate more effectively. The feature also benefits nonverbal or nonspeaking individuals who need to communicate with others in their environment.

Tap to Alexa can be used with compatible Bluetooth switches, expanding accessibility for individuals with limited mobility. This integration allows users to navigate and interact with their Fire tablet using external switch devices, accommodating various physical abilities and preferences. The combination of touch and switch access creates multiple pathways for users to access Alexa’s functionality.

Visual Accessibility Support

Amazon has integrated several features specifically designed for users with visual impairments or blindness. Show and Tell helps people identify common packaged food items that are difficult to distinguish by touch. Users can hold items in front of an Echo Show’s camera, and Alexa will identify products like canned or boxed foods, providing independence in kitchen tasks and grocery organization.

VoiceView Screen Reader enables people who are blind or have low vision to use gestures to navigate Echo Show devices while VoiceView reads aloud the actions performed on screen. This feature works similarly to screen readers on other devices, providing audio descriptions of visual elements and allowing navigation through touch gestures.

Call Captioning provides near real-time captions for Alexa calls, helping users who are deaf or hard of hearing follow conversations. The feature automatically generates text from speech during calls, displaying captions on Echo Show screens. While accuracy may vary depending on speaker clarity and background noise, the feature provides valuable support for understanding spoken content.

Alexa Captioning displays captions for Alexa’s responses on Echo Show devices, ensuring that users can see what Alexa is saying in addition to hearing it. This redundancy helps users with hearing impairments while also benefiting anyone in noisy environments or situations where audio may be unclear.

Google Assistant Accessibility Capabilities

Google has approached voice accessibility through deep integration with Android’s existing accessibility infrastructure and development of specialized features for different user needs. Its strategy focuses on seamless integration with assistive technologies already used by people with disabilities.

Android Integration Benefits

Google Assistant benefits from tight integration with Android’s accessibility features, creating a more cohesive experience for users already familiar with Android assistive technologies. TalkBack, Google’s built-in screen reader, works seamlessly with voice commands, allowing users to combine voice interaction with screen reader functionality as needed.

The integration extends to other Android accessibility features like Voice Access, which allows users to control their devices with spoken commands. Users can open apps, navigate screens, type, and edit text hands-free, with Google Assistant providing additional voice-controlled functionality on top of these core features. This layered approach creates multiple ways for users to accomplish tasks based on their preferences and abilities.

Switch Access connectivity allows users to connect external switches or keyboards to Android devices instead of interacting directly with touchscreens. When combined with Google Assistant voice commands, users gain multiple input options that can be mixed and matched based on their current situation or abilities. This flexibility proves particularly valuable for users whose abilities may fluctuate throughout the day.

Camera Switches turn Android’s front-facing camera into a switch system, allowing navigation through eye movements and facial gestures. Similar to Amazon’s Eye Gaze feature, this capability provides hands-free device control for users with motor impairments. The integration with Google Assistant voice commands creates additional pathways for interaction.

Multimodal Interaction Support

Google has developed multimodal interaction patterns that combine voice with other input methods effectively. These systems provide redundancy and flexibility that improve accessibility for all users by ensuring multiple ways to accomplish the same tasks. Users can interact through voice, touch, gesture, or keyboard input based on their current situation and abilities.

The integration of voice assistants with visual systems creates more accessible environments for users with various disabilities. Voice commands can control household functions while visual confirmations ensure users understand what actions have been completed. This approach particularly benefits users with partial hearing or speech impairments who may need multiple ways to confirm system responses.

Live Transcribe provides real-time speech-to-text transcriptions for over 70 languages and dialects. This feature helps users who are deaf or hard of hearing follow conversations and audio content. When combined with Google Assistant voice commands, users can access both speech-to-text and text-to-speech functionality as needed.

Sound Notifications alert users to specific environmental sounds like smoke alarms, crying babies, or doorbells. This feature helps users with hearing impairments stay aware of important audio cues in their environment. Google Assistant can provide additional context or take actions based on these detected sounds.

Language and Speech Recognition

Google Assistant supports diverse speech patterns and accents more effectively than many competing platforms. The system can adapt to speech patterns that may be affected by physical disabilities, ensuring accurate interpretation of commands from users with various speech characteristics. This adaptability proves particularly important for users whose speech may be affected by conditions like cerebral palsy, stroke, or ALS.

Project Relate represents Google’s specialized effort to support users with nonstandard speech patterns. The Android beta app provides tools for easier communication for people with speech impairments. Users record themselves saying 500 phrases to train the app to recognize their particular speech patterns, enabling more accurate voice recognition.

The app includes a “Listen” feature that transcribes users’ speech to text, allowing them to show the text to others who may have difficulty understanding their speech. A “Repeat” feature uses synthesized voice to repeat what users are trying to say, providing alternative communication methods. These features work alongside Google Assistant to provide multiple communication pathways.

Google’s approach to speech recognition emphasizes learning from interactions to improve understanding over time. Machine learning algorithms adapt to different speech patterns and commands, becoming more accurate as they process more examples from individual users. This personalized learning particularly benefits users with speech impairments or unique vocal characteristics.

Comparing Voice Recognition Accuracy

Voice recognition accuracy varies significantly between platforms and depends heavily on factors like speaker characteristics, background noise, and technical terminology usage. Understanding these differences helps users and organizations make informed decisions about which platform best meets their accessibility needs.

Amazon’s automatic captioning system delivers approximately 80% accuracy under ideal conditions. While this percentage may seem high, it means one in five words could be incorrect, potentially creating barriers to understanding during technical discussions or important communications. Several factors affect accuracy, including speaker voice characteristics, background noise levels, speaking pace, and use of technical terminology.
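The 80% figure above is not accompanied by a measurement method. Speech recognition accuracy is commonly reported through word error rate (WER): substitutions, deletions, and insertions divided by the number of words in a reference transcript. Treating accuracy as 1 minus WER is an assumption here, but it makes the “one in five words” arithmetic concrete. The sketch below computes WER with a word-level edit distance; the example sentences are invented.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Return (substitutions + deletions + insertions) / number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # dist[i][j] = fewest word edits turning the first i reference words
    # into the first j hypothesis words (word-level Levenshtein distance)
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i  # delete every reference word
    for j in range(len(hyp) + 1):
        dist[0][j] = j  # insert every hypothesis word

    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(
                dist[i - 1][j] + 1,         # deletion
                dist[i][j - 1] + 1,         # insertion
                dist[i - 1][j - 1] + cost,  # match or substitution
            )
    return dist[len(ref)][len(hyp)] / len(ref)


# Invented example: one misrecognized word in a five-word command
wer = word_error_rate("turn on the kitchen lights", "turn on the chicken lights")
print(f"WER = {wer:.2f}, accuracy = {1 - wer:.0%}")  # WER = 0.20, accuracy = 80%
```

A single misheard word in a five-word command is enough to bring accuracy down to 80%, and in this example that one word changes which device the command refers to.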

Lower-pitched voices typically achieve better accuracy than higher-pitched voices across most voice recognition systems. This pattern can create accessibility barriers for users whose natural voice characteristics don’t align with optimal recognition parameters. Background noise significantly reduces accuracy even at low levels, affecting users in many real-world environments.

Google Assistant’s recognition accuracy has improved significantly through integration with Google’s broader speech recognition infrastructure. The platform also benefits from extensive real-world data about how people, including people with disabilities, actually use voice technology, and this usage data helps improve recognition for diverse speech patterns.

Testing voice interfaces requires special consideration of various ambient noise conditions and users with different speech patterns. Organizations implementing voice technology should test with diverse speakers, including those with speech impairments or strong accents. This testing approach ensures that voice interfaces work effectively for intended users rather than only under ideal conditions.
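One way to act on this is to report recognition errors separately for each group of test speakers instead of as a single average, since a good overall number can hide a group the system serves poorly. The sketch below is a hypothetical helper, assuming each test utterance has already been scored (for example, with a word error rate calculation) into a count of word errors and a count of reference words; the group labels and numbers are invented.

```python
from collections import defaultdict


def wer_by_group(results):
    """Pool word errors per speaker group.

    results: iterable of (group, word_errors, reference_words), one per utterance.
    Returns {group: total errors / total reference words}.
    """
    errors = defaultdict(int)
    words = defaultdict(int)
    for group, word_errors, reference_words in results:
        errors[group] += word_errors
        words[group] += reference_words
    return {group: errors[group] / words[group] for group in errors}


def flag_groups(results, margin=0.05):
    """Groups whose pooled error rate exceeds the overall rate by more than margin."""
    results = list(results)
    total_words = sum(r[2] for r in results)
    if total_words == 0:
        raise ValueError("no reference words to score")
    overall = sum(r[1] for r in results) / total_words
    per_group = wer_by_group(results)
    return sorted(g for g, rate in per_group.items() if rate - overall > margin)


# Invented test-session data: (speaker group, word errors, reference words)
session = [
    ("typical speech", 2, 50),
    ("typical speech", 1, 40),
    ("dysarthric speech", 12, 45),
    ("strong regional accent", 5, 50),
]
print(wer_by_group(session))
print(flag_groups(session))  # ['dysarthric speech']
```

Errors are pooled per group (total errors over total words) rather than averaging per-utterance rates, so every word counts equally regardless of utterance length. The 0.05 margin is an arbitrary illustrative threshold.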

The integration of AI tools in accessibility testing has shown promise for improving voice recognition accuracy assessment. AI-powered solutions can analyze voice interaction patterns and identify potential barriers before deployment. However, these tools work best when combined with human expertise rather than replacing manual testing entirely.

Integration with Assistive Technologies

The effectiveness of voice assistants often depends on how well they integrate with existing assistive technologies that users already rely on. Amazon and Google have taken different approaches to ensuring compatibility with screen readers, switch devices, and other assistive tools.

Amazon’s APL (Alexa Presentation Language) includes built-in support for screen readers and other assistive technologies. When developers build skills using APL, they can attach descriptive labels to visual elements, for example through a component’s accessibilityLabel property, which screen readers such as VoiceView can announce. This integration allows users with visual impairments to interact with both voice and visual elements of Alexa skills effectively.

Skills that use APL also pair the visual experience with a custom voice interaction model, which should expose all skill functionality through voice intents so that the screen is never the only way to reach a feature. This approach makes skills accessible both to users with disabilities and to users on devices without screens, letting developers provide alternative access methods while maintaining full functionality.
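A skill team could lint its APL documents before release to catch touch targets that have no accessibilityLabel and touch actions that have no voice equivalent. The sketch below is a hypothetical check, not an Amazon tool: it treats any component with an onPress handler as a touch target, assumes each onPress is a single SendEvent command, and uses an invented VOICE_INTENTS mapping from SendEvent arguments to intent names. The document structure (type, version, mainTemplate, TouchWrapper, SendEvent) follows APL conventions, but the content is illustrative.

```python
# Hypothetical APL document: two touchable bulb images, one missing a label
apl_document = {
    "type": "APL",
    "version": "1.9",
    "mainTemplate": {
        "parameters": ["payload"],
        "items": [
            {
                "type": "Container",
                "items": [
                    {"type": "Text", "text": "Kitchen lights"},
                    {
                        "type": "TouchWrapper",
                        "accessibilityLabel": "Turn on the kitchen lights",
                        "onPress": {"type": "SendEvent", "arguments": ["lightsOn"]},
                        "item": {"type": "Image", "source": "https://example.com/on.png"},
                    },
                    {
                        "type": "TouchWrapper",
                        "onPress": {"type": "SendEvent", "arguments": ["lightsOff"]},
                        "item": {"type": "Image", "source": "https://example.com/off.png"},
                    },
                ],
            }
        ],
    },
}

# Hypothetical mapping from SendEvent arguments to the skill's voice intents
VOICE_INTENTS = {"lightsOn": "TurnOnLightsIntent"}


def missing_labels(node, path="document"):
    """Paths of components that have an onPress handler but no accessibilityLabel."""
    problems = []
    if isinstance(node, dict):
        if "onPress" in node and not str(node.get("accessibilityLabel", "")).strip():
            problems.append(f"{path} ({node.get('type', 'unknown')})")
        for key, value in node.items():
            problems.extend(missing_labels(value, f"{path}.{key}"))
    elif isinstance(node, list):
        for index, item in enumerate(node):
            problems.extend(missing_labels(item, f"{path}[{index}]"))
    return problems


def touch_actions(node):
    """First argument of every single-command SendEvent onPress handler."""
    found = []
    if isinstance(node, dict):
        handler = node.get("onPress")
        if isinstance(handler, dict) and handler.get("type") == "SendEvent" and handler.get("arguments"):
            found.append(handler["arguments"][0])
        for value in node.values():
            found.extend(touch_actions(value))
    elif isinstance(node, list):
        for item in node:
            found.extend(touch_actions(item))
    return found


print(missing_labels(apl_document))
# ['document.mainTemplate.items[0].items[2] (TouchWrapper)']
print([a for a in touch_actions(apl_document) if a not in VOICE_INTENTS])
# ['lightsOff']
```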

Google’s approach emphasizes integration with Android’s existing accessibility ecosystem rather than creating separate accessibility features. This strategy means users can leverage assistive technologies they already know while adding voice functionality. TalkBack integration allows screen reader users to combine familiar navigation methods with voice commands as appropriate.

Voice Access integration provides comprehensive device control through spoken commands, working alongside other assistive technologies. Users can combine Voice Access commands with switch access, screen readers, or other tools based on their needs. This layered approach creates redundant pathways for accessing functionality.

Switch Access compatibility extends to both platforms, allowing users to connect external switches for device control. Amazon’s Tap to Alexa works with compatible Bluetooth switches, while Google’s Switch Access integrates directly with Google Assistant voice commands. These integrations provide multiple input options for users with motor impairments.

Mobile Accessibility Differences

Mobile accessibility varies significantly between Amazon’s and Google’s platforms, reflecting their different approaches to device integration and user interface design. These differences affect how users access voice features and interact with accessibility tools on mobile devices.

Amazon’s mobile accessibility focuses primarily on Fire tablet integration rather than broader mobile platform support. The Fire tablet ecosystem includes features like Eye Gaze on Alexa and Text to Speech functionality. However, these specialized features remain limited to Amazon’s hardware ecosystem rather than extending to other mobile devices.

The Alexa mobile app provides some accessibility features but lacks the deep integration found on dedicated Amazon devices. Users can access basic voice functionality and manage their smart home devices, but advanced accessibility features like Eye Gaze or comprehensive switch support require Amazon hardware. This limitation affects users who prefer or require other mobile platforms.

Google Assistant mobile accessibility benefits from deep Android integration, providing more consistent experiences across different mobile devices. The same accessibility features available on Android phones work with Google Assistant voice commands, creating familiar interaction patterns for users already using Android assistive technologies.

Android’s accessibility features like TalkBack, Voice Access, and Switch Access work seamlessly with Google Assistant across different mobile devices. Users don’t need to learn separate accessibility systems for voice interaction versus general mobile use. This consistency reduces cognitive load and provides more predictable experiences.

Camera-based accessibility features differ significantly between platforms. Amazon’s Show and Tell requires Echo Show devices for visual recognition, while Google’s Lookout feature works on standard Android phones to identify surroundings and nearby objects. This difference affects users who rely on visual recognition assistance for daily tasks.

Smart Home Control for Independent Living

Voice assistants have transformed smart home accessibility by providing hands-free control over environmental systems. This capability particularly benefits users with motor impairments who may have difficulty reaching physical controls or operating traditional interfaces.

Amazon’s smart home integration allows comprehensive environmental control through voice commands. Users can control lighting, thermostats, security systems, and appliances without physical interaction. For people with mobility impairments, this capability provides significant independence in managing their living environment safely and effectively.

The integration of voice assistants with smart home devices creates additional accessibility layers for visually impaired users. Voice commands can control various household functions while reducing reliance on physical controls that may be difficult to locate or operate. Enhanced safety comes from the ability to control lighting, locks, and emergency systems through voice commands.

Eye Gaze on Alexa extends smart home control to users who cannot use voice commands effectively. The visual interface allows control of connected devices through eye movements, providing independence for users with speech impairments or conditions affecting vocal function. This capability proves particularly valuable for users with ALS or other progressive conditions.

Google Assistant smart home integration benefits from Android device ubiquity, allowing users to control their environments from familiar mobile devices. The deep integration with Android accessibility features means users can combine smart home control with existing assistive technologies. Voice commands work alongside screen readers, switch access, and other tools users already employ.

Customization options vary between platforms, affecting how well smart home systems accommodate individual user needs. Amazon’s approach allows extensive customization of voice commands and device groupings. Google’s system emphasizes learning user patterns and adapting responses based on usage data and preferences.

Implementation Considerations for Organizations

Organizations considering voice interface implementation for accessibility purposes must evaluate several factors beyond basic functionality. These considerations affect both user adoption and long-term success of accessibility initiatives.

Testing voice interfaces requires input from diverse users, not only standard quality assurance approaches. Organizations should include users with various speech patterns, including those with speech impairments or strong accents, in testing processes. This inclusive testing approach identifies barriers that might not appear with typical user testing methods.

Clear recovery paths become essential when voice commands aren’t recognized properly. Organizations must design systems that provide alternatives when voice recognition fails, ensuring users can complete tasks through multiple pathways. This redundancy particularly benefits users whose speech patterns may not align with standard recognition algorithms.
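A common pattern is to escalate: re-prompt once with concrete examples, then stop asking for speech and offer touch, switch, or typed input. The class below is a hypothetical sketch of that policy; the two-attempt limit and the prompt wording are assumptions to tune with real users, not platform defaults.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class RecoveryPolicy:
    """Hypothetical escalation policy for voice input that was not understood."""

    max_voice_attempts: int = 2  # assumption: tune with real users
    failures: int = 0

    def on_unrecognized(self) -> tuple[str, str]:
        """Record a miss and return (mode, prompt) for the next turn."""
        self.failures += 1
        if self.failures < self.max_voice_attempts:
            # Early misses: ask again, with concrete examples instead of "Sorry?"
            return ("reprompt_voice",
                    "I didn't catch that. You can say things like 'lights on' or 'call Sam'.")
        # Repeated misses: stop demanding speech and offer other input paths
        return ("offer_alternatives",
                "Let's try another way: tap an option on screen, use your switch, or type your request.")

    def on_recognized(self) -> None:
        """A successful command resets the escalation."""
        self.failures = 0


policy = RecoveryPolicy()
print(policy.on_unrecognized()[0])  # reprompt_voice
print(policy.on_unrecognized()[0])  # offer_alternatives
policy.on_recognized()
print(policy.on_unrecognized()[0])  # reprompt_voice
```

Resetting the counter after a success keeps one bad moment from pushing a user permanently out of voice mode, while still guaranteeing a non-voice path after repeated failures.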

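WCAG requirements still apply to voice interfaces, even though they may not seem obviously related to traditional web accessibility. For example, WCAG success criterion 2.1.1 (Keyboard) requires that all functionality of web content be operable through a keyboard interface, so a web feature that can only be triggered by speech would not conform. More broadly, voice interfaces should be operable through multiple input methods and provide equivalent functionality for users who cannot use voice commands, and organizations must ensure critical functions have non-voice alternatives available.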
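A simple inventory audit can show where that requirement is not yet met. The sketch below assumes a hand-maintained, hypothetical list of functions, whether each is critical, and which input modes can trigger it; it reports critical functions that can only be reached by voice.

```python
# Hypothetical inventory: function -> (is_critical, input modes that can trigger it)
INVENTORY = {
    "call emergency contact": (True, {"voice", "touch", "switch"}),
    "unlock front door": (True, {"voice"}),
    "set thermostat": (True, {"voice", "touch"}),
    "play music": (False, {"voice"}),
}


def voice_only_critical(inventory):
    """Critical functions with no input mode other than voice, sorted by name."""
    return sorted(
        name
        for name, (critical, modes) in inventory.items()
        if critical and not (modes - {"voice"})
    )


print(voice_only_critical(INVENTORY))  # ['unlock front door']
```

Keeping the inventory as data means the same list can drive both this audit and the documentation of alternative input methods.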

Staff training requirements extend beyond technical implementation to include awareness of disability-related communication needs. Support staff should understand how to assist users with different types of disabilities who may experience voice interface barriers. This training includes recognizing when alternative support methods may be more appropriate.

Cost considerations include both initial implementation and ongoing maintenance of accessibility features. Some platforms require specific hardware for advanced accessibility features, while others work with existing devices. Organizations must evaluate total cost of ownership including training, support, and hardware requirements when choosing voice platforms.

Testing Voice Interfaces for Accessibility

Effective testing of voice interfaces for accessibility requires specialized approaches that go beyond standard usability testing. Organizations must evaluate how well voice systems work for users with various disabilities under real-world conditions.

Manual testing approaches should include users with different types of speech patterns and disabilities. Testing exclusively with typical speakers misses barriers that affect intended users. Organizations should recruit test participants who represent their actual user base, including people with speech impairments, motor disabilities, and cognitive differences.

Automated testing tools can identify some voice interface barriers but cannot replace human testing for accessibility. AI-powered solutions may detect technical issues like missing alternative input methods or poor error handling. However, these tools cannot assess whether voice interactions feel natural or appropriate for users with disabilities.

Ambient noise testing proves crucial since voice interfaces often fail in realistic environmental conditions. Testing should include various background noise levels, multiple speakers, and different acoustic environments. Many accessibility barriers only appear when voice recognition accuracy decreases due to environmental factors.
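Recorded test utterances can be replayed at controlled noise levels by mixing in background noise at a chosen signal-to-noise ratio (SNR), where SNR in decibels is 20 × log10(RMS of speech / RMS of noise). The sketch below assumes audio is already loaded as lists of float samples at the same sample rate (reading and writing audio files is out of scope) and uses synthetic signals in the example.

```python
import math
import random


def rms(samples):
    """Root-mean-square amplitude of a list of samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def mix_at_snr(speech, noise, snr_db):
    """Add noise to speech, scaled so the result has the requested SNR in dB.

    SNR(dB) = 20 * log10(rms(speech) / rms(scaled noise)).
    Assumes both inputs are float samples at the same sample rate.
    """
    if len(noise) < len(speech):
        raise ValueError("noise clip must be at least as long as the speech clip")
    noise = noise[: len(speech)]
    noise_rms = rms(noise)
    if noise_rms == 0:
        raise ValueError("noise clip is silent")
    gain = (rms(speech) / 10 ** (snr_db / 20)) / noise_rms
    return [s + gain * n for s, n in zip(speech, noise)]


def measured_snr_db(speech, mixed):
    """Recover the SNR by treating (mixed - speech) as the added noise."""
    added = [m - s for s, m in zip(speech, mixed)]
    return 20 * math.log10(rms(speech) / rms(added))


# Synthetic stand-ins: a 220 Hz tone as "speech", Gaussian noise as "background"
rate = 16_000
speech = [0.5 * math.sin(2 * math.pi * 220 * t / rate) for t in range(rate)]
rng = random.Random(0)
noise = [rng.gauss(0.0, 1.0) for _ in range(rate)]

for target in (20, 10, 5, 0):
    mixed = mix_at_snr(speech, noise, target)
    print(f"target {target:>2} dB -> measured {measured_snr_db(speech, mixed):.2f} dB")
```

Each mixed clip can then be passed to the recognizer under test and scored, so accuracy can be compared across levels such as 20, 10, 5, and 0 dB SNR instead of only in a quiet room.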

Documentation requirements for voice interface accessibility should include clear descriptions of alternative input methods and recovery procedures. Users need to understand what options exist when voice commands don’t work as expected. This documentation should be available in multiple formats, including audio versions and easy-to-read text.

Integration testing with existing assistive technologies requires specialized expertise and equipment. Organizations should test how voice interfaces work with screen readers, switch devices, and other tools their users already employ. This testing ensures voice features enhance rather than interfere with established accessibility workflows.

Performance and Reliability Differences

The reliability of voice interfaces directly affects their usefulness as accessibility tools. Users with disabilities often depend on these systems for essential daily tasks, making consistent performance crucial for independence and safety.

Amazon Alexa demonstrates strong performance in controlled environments but may experience accuracy issues with users who have speech impairments. The system works well for users with clear speech patterns but requires additional training or alternative input methods for users with conditions affecting vocal clarity. This limitation affects the platform’s usefulness for some potential users.

Google Assistant’s performance benefits from extensive machine learning data but still requires individual adaptation for users with nonstandard speech. Project Relate addresses this limitation by allowing personalized training, but the setup process requires significant time investment. Users must record 500 phrases to train the system effectively, which may be challenging for some individuals.

Environmental factors affect both platforms similarly, with background noise reducing accuracy significantly. Neither platform performs well in noisy environments, which limits their usefulness in many real-world situations. Users in busy households, care facilities, or urban environments may experience reduced reliability.

Response time consistency varies between platforms and affects user experience, particularly for users with cognitive disabilities who may need predictable interaction patterns. Faster, more consistent responses reduce cognitive load and make voice interfaces more accessible to users who process information differently.
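Consistency can be measured rather than judged by feel: record the time from the end of a spoken command to the start of the response across repeated trials, and report the spread, not just the average. The sketch below assumes latencies have already been collected in milliseconds; the sample values are invented, and the 95th percentile uses the nearest-rank method.

```python
import math
import statistics


def latency_summary(latencies_ms):
    """Median, nearest-rank 95th percentile, and standard deviation of response times."""
    if len(latencies_ms) < 2:
        raise ValueError("need at least two measurements")
    ordered = sorted(latencies_ms)
    rank = math.ceil(0.95 * len(ordered))  # nearest-rank method, 1-based
    return {
        "median_ms": statistics.median(ordered),
        "p95_ms": ordered[rank - 1],
        "stdev_ms": round(statistics.stdev(ordered), 1),
    }


# Invented measurements for one command repeated ten times
samples = [820, 790, 860, 900, 810, 2400, 830, 850, 880, 800]
print(latency_summary(samples))
```

In this sample the median is 840 ms, but one response took 2,400 ms. The p95 and standard deviation surface that outlier, which is exactly the kind of unpredictability a user who depends on consistent timing will notice.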

Error recovery mechanisms differ significantly between platforms in ways that affect accessibility. Effective error handling provides clear alternatives when voice recognition fails and doesn’t require users to repeat commands exactly. Poor error handling creates barriers for users who may have difficulty adjusting their speech patterns or remembering specific command phrases.
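One way to avoid forcing exact repetition is to accept near matches to known command phrases. The sketch below uses Python’s difflib.get_close_matches for character-level similarity; the command table and the 0.75 cutoff are hypothetical, and commercial assistants use far more sophisticated language understanding than this.

```python
import difflib

# Hypothetical command phrases and the actions they trigger
COMMANDS = {
    "turn on the lights": "lights_on",
    "turn off the lights": "lights_off",
    "call my daughter": "call_daughter",
    "what time is it": "tell_time",
}


def match_command(utterance, cutoff=0.75):
    """Return the action for the most similar known phrase, or None if none is close enough."""
    normalized = " ".join(utterance.lower().split())  # ignore case and extra spaces
    matches = difflib.get_close_matches(normalized, list(COMMANDS), n=1, cutoff=cutoff)
    return COMMANDS[matches[0]] if matches else None


print(match_command("turn on lights"))      # lights_on
print(match_command("Call   my daughter"))  # call_daughter
print(match_command("play some jazz"))      # None
```

Character similarity does not understand meaning: “turn off lights” scores close to both the “on” and “off” phrases, so a design like this should confirm before acting on critical or irreversible commands.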

Future Developments and Accessibility Impact

Voice interface technology continues evolving rapidly, with developments that could significantly improve accessibility for people with disabilities. Understanding these trends helps organizations and users prepare for upcoming changes.

Standardization efforts aim to create consistent voice command patterns across different platforms and devices. This development would reduce the learning burden for users with disabilities who currently must master different command sets for different systems. Standardized commands particularly benefit users with cognitive disabilities or memory challenges.

Multimodal integration continues expanding to combine voice with gesture, eye tracking, and other input methods. These developments create more flexible interaction options that accommodate varying abilities and environmental conditions. Users gain multiple pathways for accomplishing tasks based on their current capabilities and situation.

AI improvements in speech recognition show promise for better accuracy with diverse speech patterns. Machine learning advances may reduce the training time required for systems to understand users with speech impairments. These improvements could make voice interfaces more immediately useful for a broader range of users.

Integration with brain-computer interfaces represents a frontier that could revolutionize accessibility for users with severe motor impairments. While still experimental, these technologies might eventually provide direct neural control of voice interfaces. Such developments could benefit users who cannot use current input methods effectively.

Privacy and security considerations continue evolving as voice interfaces become more personal and collect more detailed user data. Organizations must balance personalization benefits with privacy protection, particularly for users with disabilities who may be more vulnerable to data misuse.

Using Automated Tools for Quick Insights (Accessibility-Test.org Scanner)

Automated testing tools provide a fast way to identify many common accessibility issues. They can quickly scan your website and point out problems that might be difficult for people with disabilities to overcome.

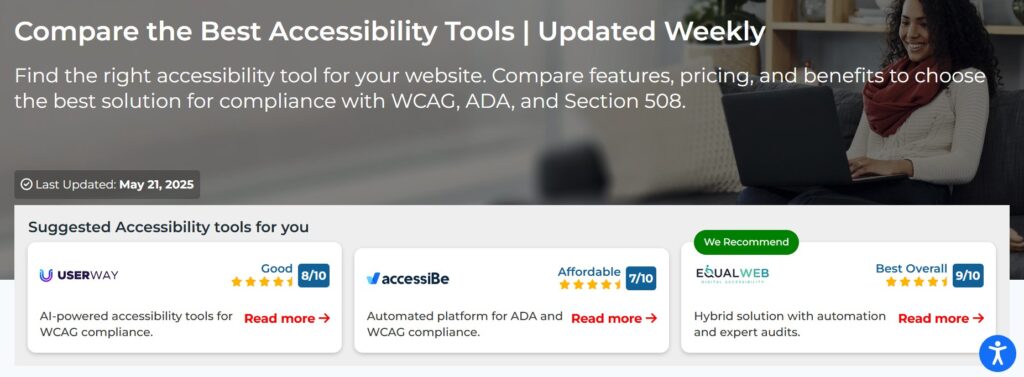

Visit Our Tools Comparison Page!

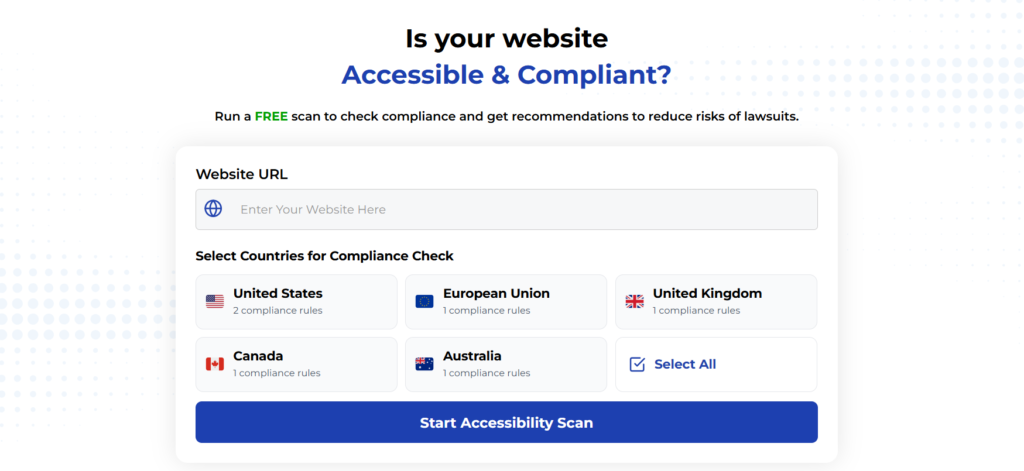

Run a FREE scan to check compliance and get recommendations to reduce risks of lawsuits

Final Thoughts

Amazon Alexa and Google Assistant each offer distinct advantages for users with disabilities, reflecting different philosophical approaches to voice accessibility. Amazon focuses on developing specialized features like Eye Gaze control and Show and Tell that address specific disability-related barriers. These innovations provide powerful solutions for users who need alternatives to traditional voice interaction, particularly those with motor impairments or visual disabilities.

Google Assistant emphasizes integration with existing Android accessibility infrastructure, creating seamless experiences for users already familiar with assistive technologies. This approach benefits users who prefer consistent interaction patterns across different applications and devices. The deep integration with TalkBack, Voice Access, and other Android features creates multiple pathways for accomplishing tasks.

The choice between platforms often depends on individual user needs, existing technology ecosystems, and specific disability-related requirements. Organizations implementing voice interfaces for accessibility should evaluate both platforms against their user base characteristics and consider testing with diverse users before making final decisions.

Both platforms continue evolving their accessibility features, suggesting that voice interfaces will become increasingly important tools for digital inclusion. The development of standardized voice commands, improved speech recognition accuracy, and better integration with assistive technologies promises to make voice interfaces more accessible to broader populations of users with disabilities.

Success in voice interface accessibility requires ongoing testing with real users, clear alternative pathways when voice recognition fails, and integration with existing assistive technologies rather than replacement of proven accessibility tools. Organizations that approach voice implementation with these principles create more inclusive digital experiences that benefit all users while providing essential independence for people with disabilities.
