Screen Reader Testing Protocols for QA Teams

Screen reader testing helps QA teams verify that websites and applications work for users who are blind or have low vision. Applied consistently, these testing protocols surface barriers that prevent people from accessing digital content. This article covers setting up testing workflows, selecting tools, and documenting results, so that QA professionals can evaluate screen reader compatibility systematically and help their organizations meet accessibility requirements.

Essential Screen Reader Tools for Comprehensive Testing

Effective screen reader testing requires using multiple tools to ensure broad coverage across different platforms and user scenarios. Each screen reader interprets code differently, making it crucial to test with several options rather than relying on just one.

NVDA, JAWS, and VoiceOver Configuration for Testing

NVDA (NonVisual Desktop Access) is a popular open-source screen reader for Windows, designed for users who are blind or have vision impairments. Because it is free, it is a good starting point for QA teams that are new to accessibility testing. To set up NVDA for testing:

- Download and install NVDA from the official website

- Configure speech settings to a comfortable speed (lower speeds are better for new testers)

- Learn essential keyboard shortcuts:

  - Insert+N: Open the NVDA menu

  - Insert+F7: List all elements by type

  - Insert+Space: Toggle between browse and focus modes

  - Ctrl: Stop reading

NVDA works particularly well when testing with Firefox and Chrome browsers. For accurate testing, ensure both the latest version and at least one older version are available to your team, as some users may not update their assistive technology regularly.

JAWS (Job Access With Speech) is another widely used screen reader, particularly in professional environments. It requires a commercial license, but its advanced features make it valuable for thorough testing:

- Configure speech rate and verbosity settings through the JAWS settings center

- Use testing mode to capture detailed information about element properties

- Create testing scripts to automate repetitive testing sequences

VoiceOver, Apple’s built-in screen reader, must be included in your testing protocol for Mac and iOS testing. Setting up VoiceOver for testing involves:

- Enable VoiceOver through System Settings > Accessibility (System Preferences on macOS versions before Ventura) or by pressing Command+F5

- Configure the VoiceOver Utility for testing-specific settings

- Practice using Trackpad Commander, which maps VoiceOver gestures to the Mac trackpad, before testing touch interfaces

- Use VO+U to open the rotor for accessing heading lists and landmarks

Testing teams should establish baseline settings for each screen reader to ensure consistent test results across team members.
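
One way to keep baselines consistent is to store them next to the test plan and compare each tester's recorded setup against them. The sketch below is illustrative only: the class, the field names, and every value (speech rate, verbosity labels, versions) are assumptions made for the example, not settings exported from any screen reader.

```python
from dataclasses import dataclass, asdict
import json


@dataclass(frozen=True)
class ScreenReaderBaseline:
    """Hypothetical record of the settings every tester should start from."""
    screen_reader: str
    version: str       # version the team has agreed to test against
    speech_rate: int   # percent of maximum rate; an assumed unit
    verbosity: str     # label chosen by the team, not a vendor constant
    browser: str


# Placeholder values, not recommendations.
BASELINES = [
    ScreenReaderBaseline("NVDA", "2023.1", 40, "default", "Chrome"),
    ScreenReaderBaseline("JAWS", "team-agreed version", 40, "default", "Firefox"),
    ScreenReaderBaseline("VoiceOver", "bundled with macOS", 40, "default", "Safari"),
]


def export_baselines(baselines):
    """Serialize baselines to JSON so they can be attached to a test plan."""
    return json.dumps([asdict(b) for b in baselines], indent=2)


def differs_from_baseline(actual, baselines):
    """Return True when a tester's recorded setup matches no agreed baseline."""
    return actual not in baselines
```

A tester who raised the speech rate to 80 would be flagged by `differs_from_baseline`, which is a prompt to note the deviation in the test results rather than a failure in itself.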

Screen Reader and Browser Compatibility Matrix

Creating a testing matrix helps teams organize their approach to screen reader testing across multiple environments. This structured approach ensures nothing gets overlooked while preventing duplicate testing efforts. A basic matrix should include:

| Screen Reader | Windows + Chrome | Windows + Firefox | Windows + Edge | macOS + Safari | macOS + Chrome | iOS | Android |
| --- | --- | --- | --- | --- | --- | --- | --- |
| NVDA | Primary | Secondary | Secondary | N/A | N/A | N/A | N/A |
| JAWS | Secondary | Primary | Secondary | N/A | N/A | N/A | N/A |
| VoiceOver | N/A | N/A | N/A | Primary | Secondary | Primary | N/A |
| TalkBack | N/A | N/A | N/A | N/A | N/A | N/A | Primary |

Mark combinations as “Primary” (must test), “Secondary” (test if resources allow), or “N/A” (not applicable). This prioritization helps teams focus their efforts effectively while ensuring adequate coverage.

The matrix should be updated quarterly to reflect new browser versions and screen reader updates. For example, if NVDA releases a major update, the team should add specific test cases to verify functionality with the new version.
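
Keeping the matrix as data makes it easy to generate the list of combinations for each test cycle. The following is a minimal sketch under stated assumptions: the dictionary layout and function names are invented for illustration, "N/A" cells are omitted, and the VoiceOver on iOS cell is treated as Primary.

```python
# Priorities taken from the matrix; "N/A" cells are left out.
# Assumption: VoiceOver on iOS is treated as "Primary".
MATRIX = {
    "NVDA": {
        "Windows + Chrome": "Primary",
        "Windows + Firefox": "Secondary",
        "Windows + Edge": "Secondary",
    },
    "JAWS": {
        "Windows + Chrome": "Secondary",
        "Windows + Firefox": "Primary",
        "Windows + Edge": "Secondary",
    },
    "VoiceOver": {
        "macOS + Safari": "Primary",
        "macOS + Chrome": "Secondary",
        "iOS": "Primary",
    },
    "TalkBack": {"Android": "Primary"},
}


def combinations(priority, matrix=MATRIX):
    """Return (screen reader, environment) pairs with the given priority."""
    return [
        (reader, environment)
        for reader, environments in matrix.items()
        for environment, level in environments.items()
        if level == priority
    ]


def plan(matrix=MATRIX, include_secondary=False):
    """Build the list of combinations to cover in one test cycle."""
    selected = combinations("Primary", matrix)
    if include_secondary:
        selected = selected + combinations("Secondary", matrix)
    return selected
```

Calling `plan()` yields only the must-test combinations, while `plan(include_secondary=True)` adds the ones to cover when resources allow.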

Structured Testing Methodology for Screen Readers

A methodical approach to screen reader testing yields more consistent results than ad-hoc testing. This section outlines a structured process that QA teams can follow to ensure thorough evaluation of digital products.

Page Navigation and Content Comprehension Testing

The first phase of screen reader testing focuses on how users navigate through content. This includes testing whether users can understand the overall structure of pages and access information efficiently.

Start by testing the document structure. For screen reader users, proper page structure is essential for understanding complex content and layouts. Check that heading tags (H1-H6) follow a logical hierarchy and provide an accurate outline of the page content. Common issues to look for include:

- Missing heading tags on important sections

- Skipped heading levels (e.g., H1 followed directly by H3)

- Headings that don’t accurately describe their sections

- Decorative text formatted as headings
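
Some of these checks can be pre-screened before a manual pass. The sketch below uses Python's standard `html.parser` module to flag pages with no headings and pages that skip levels; the class and function names are hypothetical. It cannot judge whether a heading describes its section or is merely decorative, so those checks still need a listener.

```python
from html.parser import HTMLParser


class HeadingCollector(HTMLParser):
    """Collect heading levels (1-6) in document order."""

    def __init__(self):
        super().__init__()
        self.levels = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag names, so "H2" arrives as "h2".
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self.levels.append(int(tag[1]))


def heading_issues(html):
    """Return human-readable findings about the heading outline."""
    collector = HeadingCollector()
    collector.feed(html)
    levels = collector.levels
    if not levels:
        return ["no headings found"]
    issues = []
    # Moving to a deeper level is fine only one step at a time.
    for previous, current in zip(levels, levels[1:]):
        if current > previous + 1:
            issues.append(f"h{previous} followed by h{current} (skipped level)")
    return issues
```

Moving back up the outline, for example from an H3 to an H2, is not reported, because closing a subsection is a normal part of a logical hierarchy.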

Test how screen readers announce page titles, which help users understand what page they’ve landed on. Each page should have a unique, descriptive title that clearly communicates its purpose.

Next, evaluate text content readability. Listen to how the screen reader pronounces specialized terms, acronyms, and numbers. Check that:

- Abbreviations and acronyms are properly marked up with the abbr element

- Phone numbers and dates are formatted to be read correctly

- Foreign language phrases are marked with appropriate lang attributes

- Technical terms are pronounced correctly or have pronunciation guidance

While testing navigation, verify that keyboard focus moves in a logical order through the page. Users should be able to tab through interactive elements in a sequence that matches the visual layout of the page.

Landmark Navigation Assessment

Landmarks provide important navigation shortcuts for screen reader users. Test the implementation of HTML5 landmark regions (header, nav, main, footer) and ARIA landmarks (role="banner", role="navigation", etc.).

Verify that:

- All major sections of the page have appropriate landmarks

- Landmarks are nested correctly (for example, banner, main, and contentinfo sit at the top level rather than inside another landmark)

- Multiple instances of the same landmark type have descriptive labels

- The main content area is properly marked with the main landmark
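
Two of these checks, a missing main landmark and repeated landmarks without labels, lend themselves to a quick static scan. This is a rough sketch with hypothetical names, built on the standard `html.parser` module. It simplifies in ways a real audit should not: it treats every header and footer element as a landmark regardless of nesting, and it does not evaluate nesting rules at all.

```python
from collections import Counter
from html.parser import HTMLParser

# Native elements mapped to the landmark role they usually expose.
# Simplification: header/footer nested in sectioning content are not
# landmarks in browsers, but this sketch counts them anyway.
ELEMENT_ROLES = {
    "header": "banner",
    "nav": "navigation",
    "main": "main",
    "footer": "contentinfo",
}
LANDMARK_ROLES = set(ELEMENT_ROLES.values()) | {"complementary", "search"}


class LandmarkCollector(HTMLParser):
    """Collect (role, has_label) for each landmark in document order."""

    def __init__(self):
        super().__init__()
        self.landmarks = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        role = attributes.get("role") or ELEMENT_ROLES.get(tag)
        if role in LANDMARK_ROLES:
            labelled = bool(
                attributes.get("aria-label") or attributes.get("aria-labelledby")
            )
            self.landmarks.append((role, labelled))


def landmark_issues(html):
    """Flag a missing main landmark and unlabelled repeated landmarks."""
    collector = LandmarkCollector()
    collector.feed(html)
    counts = Counter(role for role, _ in collector.landmarks)
    issues = []
    if counts["main"] == 0:
        issues.append("no main landmark")
    for role, labelled in collector.landmarks:
        if counts[role] > 1 and not labelled:
            issues.append(
                f"{role} landmark appears {counts[role]} times "
                "but one instance has no label"
            )
    return issues
```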

Test landmark navigation by using screen reader shortcuts:

- NVDA: Insert+F7, select landmarks

- JAWS: Insert+Ctrl+R for the list of regions (landmarks), or Insert+F3 to choose it from the Virtual HTML Features dialog

- VoiceOver: VO+U to open rotor, select landmarks

Count the number of keystrokes required to reach important content using landmark navigation compared to sequential navigation. Efficient landmark implementation should significantly reduce the number of keystrokes needed.

Interactive Element Testing with Screen Readers

Interactive elements require special attention during screen reader testing. These elements include links, buttons, form controls, and custom widgets that users interact with.

For links, verify that:

- Link text clearly indicates the destination or purpose

- Screen readers announce links as links, reading the role alongside the link text

- Links with the same text but different destinations have additional context

- Links to PDFs or other document types announce the file type
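
Links that share text but lead to different destinations can be listed automatically, which gives testers a shortlist to listen to. The sketch below is one possible approach with hypothetical names. It compares visible text only, so links that are already disambiguated with aria-label or surrounding context will still appear and need manual confirmation.

```python
from collections import defaultdict
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Collect (visible text, href) for every anchor that has an href."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            # Collapse runs of whitespace so "Read  more" equals "Read more".
            text = " ".join("".join(self._text).split())
            self.links.append((text, self._href))
            self._href = None


def ambiguous_links(html):
    """Map link text to its destinations when one text points to several URLs."""
    collector = LinkCollector()
    collector.feed(html)
    destinations = defaultdict(set)
    for text, href in collector.links:
        destinations[text.lower()].add(href)
    return {
        text: sorted(hrefs)
        for text, hrefs in destinations.items()
        if len(hrefs) > 1
    }
```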

Button testing should confirm that:

- Buttons have descriptive labels that indicate their action

- Screen readers recognize and announce custom buttons as buttons

- Icon-only buttons have appropriate accessible names

- Button states (disabled, pressed) are properly announced

Custom widgets and components require rigorous testing. For each custom widget:

- Verify appropriate ARIA roles are applied

- Test keyboard operability of all functions

- Confirm that state changes are announced

- Check that help text and instructions are accessible

Pay special attention to dynamic content that updates without page refreshes. Screen readers should announce:

- Toast messages and notifications

- Content added to the page through infinite scrolling

- Modal dialogs and their focus management

- Live regions with appropriate politeness settings

Form Completion and Error Recovery Pathways

Forms present unique challenges for screen reader users. Testing should verify that users can complete forms efficiently and recover from errors.

Test form field labels to ensure:

- All form controls have proper labels

- Labels are programmatically associated with their fields

- Required fields are clearly indicated

- Field purpose is clear (e.g., format expectations)
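
Programmatic label association is one of the few form checks that can be screened statically. The following sketch, with hypothetical names, lists controls that have no wrapping label, no label pointing at their id, and no aria-label or aria-labelledby attribute. It does not check that a label's text is meaningful or that required fields are indicated, which still requires listening with a screen reader.

```python
from html.parser import HTMLParser

LABELLABLE = {"input", "select", "textarea"}
# Input types that do not need a separate text label.
SKIPPED_INPUT_TYPES = {"hidden", "submit", "button", "reset", "image"}


class FormCollector(HTMLParser):
    """Collect form controls and the ids that labels point to."""

    def __init__(self):
        super().__init__()
        self.label_targets = set()  # ids referenced by <label for="...">
        self.controls = []          # attribute dicts of form controls
        self._open_labels = 0       # number of currently open <label> elements

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "label":
            self._open_labels += 1
            if attributes.get("for"):
                self.label_targets.add(attributes["for"])
        elif tag in LABELLABLE:
            if tag == "input" and attributes.get("type", "text") in SKIPPED_INPUT_TYPES:
                return
            attributes["_wrapped"] = self._open_labels > 0
            self.controls.append(attributes)

    def handle_endtag(self, tag):
        if tag == "label" and self._open_labels:
            self._open_labels -= 1


def unlabelled_controls(html):
    """Return the name (or id) of each control with no programmatic label."""
    collector = FormCollector()
    collector.feed(html)
    missing = []
    for control in collector.controls:
        labelled = (
            control["_wrapped"]
            or control.get("id") in collector.label_targets
            or control.get("aria-label")
            or control.get("aria-labelledby")
        )
        if not labelled:
            missing.append(control.get("name") or control.get("id") or "(unnamed control)")
    return missing
```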

When testing error handling, check that:

- Error messages are announced automatically when they appear

- Error messages are linked to their corresponding fields

- Instructions for correction are clear and specific

- Focus moves to the first field with an error

Create test scenarios that simulate common user journeys through forms:

- Completing a form with all valid data

- Submitting with missing required fields

- Entering invalid data formats

- Recovering from validation errors

Time how long it takes to complete forms using only a screen reader and keyboard. Compare this to visual completion times to identify efficiency issues.

Documenting Screen Reader Testing Results

Thorough documentation of screen reader testing results helps teams track progress, prioritize fixes, and build institutional knowledge about accessibility.

Issue Classification by Impact Level

Not all screen reader issues have the same impact on users. Classifying issues by severity helps teams prioritize remediation efforts. Consider using these impact levels:

Critical Impact: The issue prevents screen reader users from completing essential tasks. Examples include:

- Forms that cannot be submitted using keyboard only

- Navigation menus that aren’t accessible via keyboard

- Content that’s completely invisible to screen readers

These issues require immediate attention and should block releases until resolved.

High Impact: The issue significantly hinders task completion but has workarounds. Examples include:

- Illogical focus order that makes navigation confusing

- Missing form labels that make fields difficult to identify

- Images with missing alt text that contain important information

High impact issues should be prioritized for the next release cycle.

Medium Impact: The issue causes inconvenience but doesn’t prevent task completion. Examples include:

- Redundant or verbose announcements

- Suboptimal landmark structure

- Minor focus management issues

Low Impact: The issue represents technical non-compliance but has minimal impact on actual usage. Examples include:

- Duplicate IDs that don’t affect functionality

- Minor pronunciation issues

- Decorative images with unnecessary alt text

For each issue, document:

- Impact level with justification

- Affected user groups

- Contexts where the issue occurs

- Screen readers and browsers where the issue was observed
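
The impact levels and documentation fields above map naturally onto a small data structure. This is a sketch under stated assumptions: the class names, field names, and helper functions are invented for illustration, and the only rule it encodes is the one stated earlier, that critical issues block a release.

```python
from dataclasses import dataclass
from enum import IntEnum


class Impact(IntEnum):
    """Impact levels, ordered so that lower values sort first."""
    CRITICAL = 1
    HIGH = 2
    MEDIUM = 3
    LOW = 4


@dataclass
class Issue:
    summary: str
    impact: Impact
    justification: str   # why this impact level was chosen
    affected_users: str
    context: str         # where in the product the issue occurs
    observed_in: list    # e.g. ["NVDA + Firefox"]


def blocks_release(issues):
    """Critical issues block a release until they are resolved."""
    return any(issue.impact == Impact.CRITICAL for issue in issues)


def triage(issues):
    """Order issues from most to least severe for remediation planning."""
    return sorted(issues, key=lambda issue: issue.impact)
```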

Creating Reproducible Screen Reader Bug Reports

Bug reports for screen reader issues must contain sufficient detail for developers to understand and reproduce the problem. Effective bug reports include:

Environment Information:

- Screen reader name and version

- Browser name and version

- Operating system name and version

- Any relevant browser extensions or settings

- Screen reader settings (speech rate, verbosity level)

Step-by-Step Reproduction:

- Specific URL or starting point

- Exact keyboard commands used

- Expected screen reader announcement

- Actual screen reader announcement

- Timestamps for reference if recordings are available

Visual and Audio Evidence:

- Screenshots with focus indicators highlighted

- Screen recordings with screen reader audio

- Transcripts of screen reader output

- Code snippets showing problematic markup

Potential Solutions:

- References to similar resolved issues

- Links to relevant WCAG success criteria

- Suggested code fixes if known

- Links to design patterns that address the issue

Sample bug report format:

Bug: Form error messages not announced by screen reader

Priority: High Impact

Environment: NVDA 2023.1, Firefox 115, Windows 11

Steps to Reproduce:

1. Navigate to [form URL]

2. Tab to the email field

3. Enter “invalid-email” (without quotes)

4. Press Tab to move to the next field

5. Submit the form using the Submit button

Expected Behavior: Screen reader announces error message “Please enter a valid email address”

Actual Behavior: Focus moves to email field but error message is not announced

Evidence: Recording attached showing silent focus return

Suggested Fix: Add aria-live="assertive" (or role="alert") to an error container that already exists in the DOM when the page loads, then inject the error text into it after validation so that the change is announced
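
Teams that file many reports may find it useful to generate this format from structured data, so that required sections are never left out. The sketch below is a hypothetical helper, not part of any bug tracker's API; the class and its field names are assumptions that mirror the headings of the sample.

```python
from dataclasses import dataclass


@dataclass
class BugReport:
    title: str
    priority: str
    environment: str
    steps: list
    expected: str
    actual: str
    evidence: str = ""
    suggested_fix: str = ""

    def missing_fields(self):
        """List required fields that are empty, to catch incomplete reports."""
        required = ("title", "priority", "environment", "steps", "expected", "actual")
        return [name for name in required if not getattr(self, name)]

    def render(self):
        """Format the report using the same headings as the sample."""
        lines = [
            f"Bug: {self.title}",
            f"Priority: {self.priority}",
            f"Environment: {self.environment}",
            "Steps to Reproduce:",
        ]
        lines += [f"{number}. {step}" for number, step in enumerate(self.steps, start=1)]
        lines += [
            f"Expected Behavior: {self.expected}",
            f"Actual Behavior: {self.actual}",
        ]
        # Optional sections appear only when they have content.
        if self.evidence:
            lines.append(f"Evidence: {self.evidence}")
        if self.suggested_fix:
            lines.append(f"Suggested Fix: {self.suggested_fix}")
        return "\n".join(lines)
```

Checking `missing_fields()` before calling `render()` gives reviewers a simple gate: a report with no environment or no reproduction steps goes back to the tester rather than to a developer.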

When multiple team members test the same functionality, compare results across different screen readers to identify patterns. Some issues may only appear in specific screen reader/browser combinations.
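
Comparing results is easier when each tester records outcomes in the same shape. As a minimal sketch, assuming results are kept as (test case, combination, passed) tuples, the hypothetical function below separates failures that occur everywhere from those tied to particular screen reader/browser pairs.

```python
from collections import defaultdict


def failure_patterns(results, all_combinations):
    """Group failures by test case and label how widespread each one is.

    results: iterable of (test_case, combination, passed) tuples, where
    combination is a string such as "NVDA + Firefox".
    all_combinations: every combination that was tested.
    """
    failures = defaultdict(set)
    for test_case, combination, passed in results:
        if not passed:
            failures[test_case].add(combination)

    patterns = {}
    for test_case, failed in failures.items():
        if failed == set(all_combinations):
            scope = "all combinations"
        else:
            scope = "specific combinations"
        patterns[test_case] = (scope, sorted(failed))
    return patterns
```

A failure in all combinations usually points at the markup itself, while a failure in one combination is worth reporting with that environment called out explicitly.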

Building Screen Reader Testing into Your QA Workflow

Integrating screen reader testing into existing QA processes makes accessibility testing sustainable rather than a one-time effort.

Automated vs. Manual Screen Reader Testing

While automated tools can identify many potential accessibility issues, they cannot replace manual screen reader testing. Automated tools excel at finding technical violations like missing alt text or improper heading structure, but they cannot evaluate the actual user experience.

The limitations of automated tools include:

- Inability to assess the quality of alt text (only its presence)

- Limited evaluation of custom widget functionality

- No evaluation of logical reading order or focus order

- Incomplete detection of ARIA implementation issues

Establish a balanced approach:

- Use automated tools for initial scanning and regression testing

- Follow up with manual screen reader testing for user experience issues

- Combine results from both methods for complete coverage

Incorporating User Feedback in Testing Protocols

Screen reader testing becomes more effective when informed by real user data. Use analytics and user feedback to guide your testing efforts:

- Analyze which screen readers your actual users employ

- Focus testing on common user paths and critical functionality

- Recruit screen reader users for usability testing sessions

- Document and prioritize issues reported by actual users

Consider implementing a feedback mechanism specifically for accessibility issues, allowing users to report problems they encounter in real-world usage.

Training QA Teams for Effective Screen Reader Testing

Not every QA professional needs to become an accessibility expert, but all should have basic screen reader testing skills. Develop a training program that includes:

- Screen reader basics (installation and keyboard commands)

- Common testing scenarios with examples

- Proper documentation techniques

- Troubleshooting common screen reader issues

Create reference materials including:

- Cheat sheets for keyboard commands

- Testing checklists for common components

- Video demonstrations of testing techniques

- Sample bug reports for reference

Schedule regular practice sessions where team members can improve their screen reader skills in a supportive environment. Consider assigning accessibility champions who can develop deeper expertise and support other team members.

Using Automated Tools for Quick Insights (Accessibility-Test.org Scanner)

Automated testing tools provide a fast way to identify many common accessibility issues. They can quickly scan your website and point out problems that might be difficult for people with disabilities to overcome.

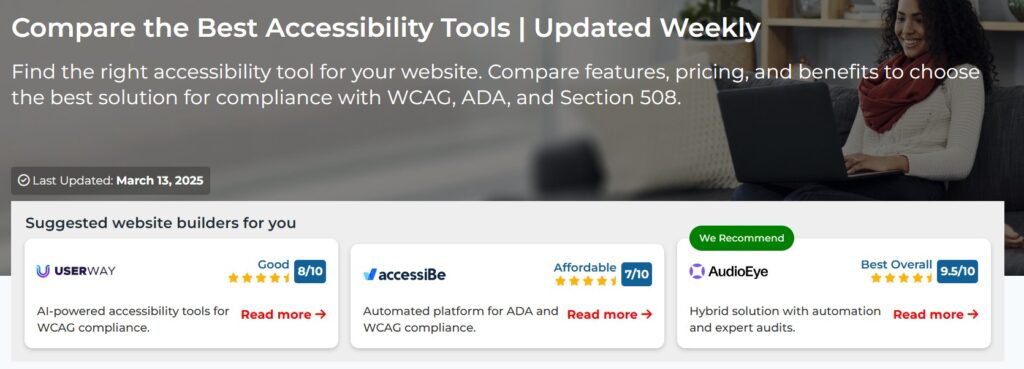

Visit Our Tools Comparison Page!

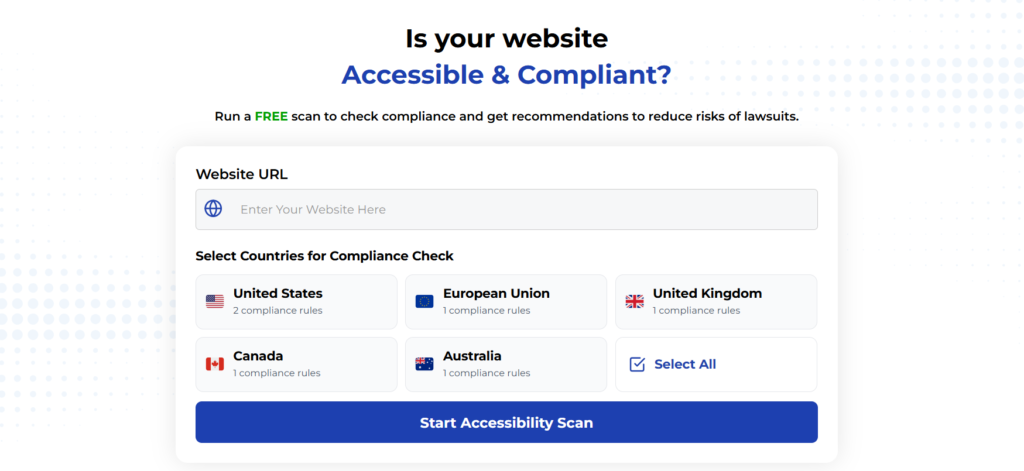

Run a FREE scan to check compliance and get recommendations to reduce risks of lawsuits

Establishing effective screen reader testing protocols enables QA teams to identify and address accessibility barriers systematically. By using a structured approach that includes testing with multiple screen readers, following methodical testing procedures, and documenting results thoroughly, teams can ensure digital products work well for all users, including those who rely on screen readers.

The key to successful screen reader testing lies in making it a regular part of the QA process rather than a separate initiative. When accessibility testing is integrated into existing workflows and supported with proper training and resources, it becomes a natural part of ensuring product quality.

Remember that screen reader testing is not just about compliance—it’s about creating digital experiences that work for everyone. By implementing the protocols outlined in this article, QA teams can play a crucial role in making the web more accessible to all users.
